Introduction

For application developers and architects then the development landscape is more complex and pressured today than ever before. On premise, off premise, public cloud, private cloud, agility, DevOps all add pressure to decisions whilst none take away the need to bring solutions to market quickly and, of course, minimise costs.

How many times has the architecture and solution components been chosen before the prime time end game has been realised, only to find the solution doesn’t match the needs and the project needs a redesign or rewrite to achieve the business goals. At this point have costs and the time to market actually been minimised? Would or could an alternative set of decisions made better sense?

As solutions have been rolled out has database “sprawl” occurred, which adds DBA management and complexity as well as license and support costs to the database environment? At a project level are things viewed going well but at the higher levels of IT management unexpected redesign, infrastructure, licensing and support costs seem to be getting out of control as the solution starts to move “prime time”?

This short paper seeks to compare some of the choices available in the lifecycle of application databases and identify where HPE could provide a solutions for reducing complexity, constraining costs and providing flexibility without compromising capability.

Challenges facing IT

The database marketplace has become much more varied over the past few years. Generic databases used to be primarily relational or hierarchical in nature. As open source software has started to become common place so too have specialised databases.

Public Cloud offerings are often seen using open source and specialised databases (e.g. columnar, NoSQL and object oriented) which make an attractive proposition for proof of concepts and pilots. Cost containment and fast time to market is seen as a pre-requisite for such developments.

Modern application design is often built around micro-services and statelessness so they can scale out and have re-use. They require the right data store for the right task to be selected (in-memory cache, columnar, NOSQL, RDBMS) but add complexity due to the management and orchestration of components. Automation using common tools and DevOps is seen as key to agility and reuse.

The database management systems themselves, as indicated above, need to be chosen carefully for the task. With not only Relational Database Management Systems but Columnar Databases (big data for text, unstructured graphic and network database), Object Oriented Database and the concept of both Structured Query Language and NoSQL then understanding the implications of choice now and for the future of the application is paramount.

NoSQL (e.g. Hadoop, SAP Hana, Cloudera, Cassandra and MongoDB) is often seen as giving huge benefits in terms of agility because there is no database schema (or often schema later) in the design and proof of concept phase with “eventual” database consistency and no standardised query language. There is a significant learning curve for use given there are no common architectures or APIs. Choosing such an architecture for main stream, high velocity and mission critical solutions can involve large amounts of “plumbing” being required for bespoke transactional consistency. The lack of a schema and also a standard query language makes it difficult to share data with other applications and use query tools for ad-hoc and management information. What was seen as “agile” can now be a barrier to moving forward quickly into “prime time” as everything needs hand crafting or a major re-design or even DBMS migration. Object Oriented databases (e.g. VBbase and Informix) face some of the same issues since significant re-skilling is required, consistency management tends to be through bespoke plumbing in the application but integration into Java or C++ environments using open source tools such as Ruby, Delphi and Python is good.

Interchangeability between DBMS, should the wrong choice for an application be made, can be highly complex and costly in terms of redesign and coding. What seemed a good, low cost choice for a proof of concept might cause major delays, skill shortages, re-coding and costs to migrate into a prime-time production architecture. Underlying DBMS infrastructure requirements may also change.

Scalability limits can cause significant re-architecting and management overheads as well as skill pressures even when using generic merchant databases. Scalability can include not only database capacity but also the velocity of transactions being consumed. Scalability can impact hardware architecture to scale-up or scale-out as well as the exploitation of data replica to handle not only velocity but the sharing of data across applications. A proliferation of servers and databases are often added to handle scale causing additional licensing and security increases overheads and costs. The use of multiple databases in replication for multiple application data access results in decentralised management complexity and this administration overhead along with licensing, support cost increases suddenly start to consume large proportions of IT budgets stifling innovation.

Availability requirements for mission critical prime-time use only add to the architectural, management and licensing cost issues and then start to lead to compromises between scalability and availability. Examples of how architecture and licensing as well as administration skills and infrastructure change can been seen in an Oracle environment; as application started with Standard Edition may have to more to Enterprise Edition to support replica needs and then start to move into Real Application Clusters (RAC) for more scalability and availability with specialist hardware and storage licensing being needed should Exadata be determined as the ultimate way forward to handle scale. Even though a set of common APIs, transactional consistency and structured query language (SQL) is supported across the product sets the migration between them means application and database as well as infrastructure and skill changes for the development, DBA and infrastructure teams. This adds complexity and cost as well as reduces agility even though many of underlying base tools may stay the same.

RDBMS what to look for?

Specialised databases bring value to specialised needs but for solutions where the business envisage the need to rapidly scale, share data amongst applications and to potentially support mission critical SLAs then choosing the correct solutions at the start of the lifecycle will often mean, where structured data is involved, that a generic RDBMS is the best solution.

What is clear is that standardised APIs and the Structured Query Language means that migration to an alternative RDBMS (even within the same family) is a much simpler migration as the application moves from proof of concept, to pilot to main stream production than migration from specialised databases such as NoSQL or Object Oriented.

Caution should therefore be exercised when undertaking proof of concepts in a public cloud environment that the freely available tools do not lead the development down a blind alley due to perceived low start-up costs. The end game for the application and the business vision needs firmly establishing and considered when designing even at proof of concept level since this will end up playing a key part in agility, flexibility and costs. The key here goes back to the right store for the right task.

When considering an RDBMS some of the capabilities for a modern environment include:

- Database Services

- Integration into DevOps environments

- Multi-tenancy

- Scalability to support growth

- Highly Available to support mission critical deployments

- Simplicity to consistently meet SLAs

- Compatibility to ease portability

Vendors have offerings generally that will cover some of these capabilities but this will often either involve licensing and support step changes or a modified database and infrastructure architecture to provision sets of features. So what do each of these features support and why is it valuable?

Database Services can cover a number of features but essentially these are of most value when associated with development and testing. Here APIs aligned to database services allow automated and rapid deployment of a database instance from a self-service environment and equally should also place resources consumed back into a “pool” when finished with. This frees up DBA, security and other infrastructure management personnel from more menial tasks in to assisting business initiatives and innovation.

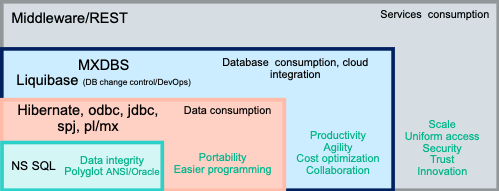

DevOps Integration supports automation but throughout the lifecycle of an application. Part of this integration should cover the ability to manage change through source control such as database schema, tables and indexes which can be managed through change control tools and shared amongst development teams as well as having automated rollback facilities. A good example of such a change control tool in the database world is Liquibase.

Multi-tenancy supports the ability to install multiple database instances in a shared environment. This provides for efficiencies to be exploited and can be an architecture exploited for cloud environments. The instances, though sharing an environment, have isolation from one another. Licensing and support models do not necessarily change just because an instance of a database uses multitenancy and therefore this feature is often about operational utilisation efficiency.

Scalability is simplistically the ability of an environment to support growth. When looking at databases there are several dynamics to be considered.

Pure database size can be critical since items such as storage methodologies and also the amount of indexes needed to support query execution plans can impact the logical capacity of a database instance. Scalability will also be associated with the response times required and the velocity of data changes as concurrency and locking strategies all have an impact of scale. Managing audit for being able to keep transactional and database consistency also become more challenging as data velocity increases.

The ability of a database to undertake work in parallel can also impact response times and hence impact whether SLAs can be achieved. This may or may not be part of a standard licensing scheme, impacting operational costs.

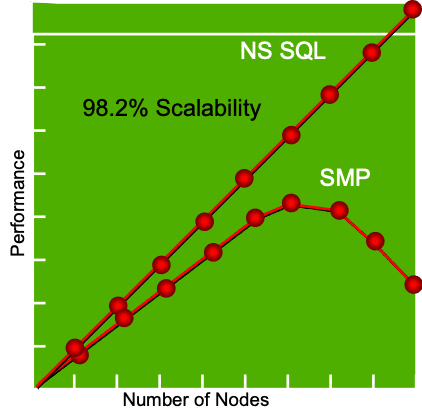

The overall infrastructure and underlying architecture affects scalability. SMP (symmetric multi-processing) typically demands a scale-up approach using more powerful CPUs since as each additional CPU (node) is added to an SMP environment there is a diminishing return in terms of additional throughput – even if parallel execution is available. The effect of this is that buying strategies are typically to deploy a large environment and fill it up. The architecture (and possibly application design and components) may have to be swapped out to support growth SLAs. This impacts licensing, support and infrastructure. Data sharing amongst applications can exacerbate this scaling issue making replica copies for additional use an often used solution. This adds costs and complexity to the overall architecture and management required. Exadata is a set of specialist hardware with specialised low latency fast fabric to assist SMP scaling and lock management. However, it needs additional storage licensing, non-standard hardware and different Oracle licensing to support it. It therefore demands a migration from typical Oracle environments and therefore adds time, cost and complexity.

MPP (massively parallel processing), which is a more modern approach favoured by database technologies such as SAP Hana and Hadoop, uses loosely coupled parallel technologies where scale-out is the dominant feature. This means additional nodes do not see the diminishing throughput returns as in an SMP environment but these can also make use of a more powerful CPU for individual nodes. Database technologies and especially relation database management systems traditionally favour SMP. Typically because of the more linear nature of scaling in MPP then deployment is for only what is needed at that time for the SLA, making costs more granular.

Real Application Clusters have been an additional solution to resolve some of the limitations of SMP designs. This distributes workload over multiple nodes and can potentially also help with availability. Shared storage systems as well as special RAC licensing is required here. Scalability is limited due to the contention demanded by the flushing of cache from the Distributed Lock Manager methodology employed to ensure lock consistency and data integrity employed across the nodes in a RAC cluster. Typically throughput falls off after 4 nodes are employed for RAC even though fast fabrics have been deployed.

High Availability is often a requirement as applications move into prime-time production. More and more businesses, whether using traditional IT or cloud-based solutions, are running solutions near 24×7. Databases have to respond to this preventing unscheduled downtime and managing scheduled downtime and housekeeping carefully. Irrespective of whether applications are “stateless” or not the database is the “safe store” for the current state of the business whether it be stock, orders, payments or accounts.

In order for a business to safely transact, any change in the database entity values has to be able to be recorded as this is a key part of maintaining ACID (atomicity, consistency, isolation and durability) properties of a database for data integrity. For most relational databases introducing high availability is both an architectural and a license enhancement requirement. It requires additional DBA skills, cluster aware software and enhancements to the underlying infrastructure. By its very nature of being a change and enhancement to the original architecture and components High Availability increases complexity. Complexity often increases management overheads and introduces risk; operational costs increase as a result of complexity.

Clustering is the typical SMP architecture to support high availability. It requires two sets of server infrastructures, a shared storage subsystem, clustering software and a cluster aware database. One servers is “active” and the other is “standby”. Despite a dual server configuration (irrespective of how many CPUs per server) then the architecture only supports processing in 50% of the total infrastructure deployed, a high overhead. When comparing this to a fault tolerant architecture based upon an MPP design then there is very little additional overhead for availability since only the failed CPU’s (node) workload has to be assumed by the surviving nodes. On a 4 CPU (node) system this is 25%, 8 Node is 12.5% and 16 node it is 6.25% i.e. lower additional capacity cost for availability.

Not only is the cost of adding HA clustering high to add availability but the time to failover can be considerable, especially at high data velocities, since the database has to marry committed transactions with database changes to maintain data integrity consistency. This stalls the application and is noticeable outage to the users as it can take many minutes in some cases. With fault tolerance, the very nature of fault tolerant processes and check pointing means that the backup takes over in seconds with no perceivable impact to the users.

Simplicity is around being able to start small and grow with business requirements proving the availability to meet SLAs with minimal redesign and re-architecting as well as having an underlying hardware and software infrastructure that does not need re-integrating and retuning every time a software version has to be changed. Simplicity involves technical and commercial challenges since consistency allows solutions to meet known business goals without retraining, re-architecting, migrating, redeploying or relicensing and hence costs and SLAs should be predictable.

In 1970 Edsger W. Dijkstra (UT Austin) wrote “simplicity is prerequisite for reliability” and therefore should be a mantra when looking at the always on, high growth IT environments demanded by business.

When looking at Oracle, as an example, it is easy to see the complexity for database capability, functions and licensing which customers have to navigate through:

- Oracle Standard Edition – basic database management features for small to medium deployments

- Oracle Enterprise Edition – advanced replication, failover including data guard, online index rebuilds, materialised views, parallel query/index, incremental backup/recovery, tablespace recovery point, multi-tenant

- Oracle Partitioning (for large databases especially over 100GB)

- Oracle RAC high availability and scalability enhancement

- Oracle Exadata for extreme scalability with specialised hardware and additional storage licensing

Moving between these offerings is a considered option which requires additional skills, hardware infrastructure, licensing and support options as well as migration requirements.

Compatibility and Portability are areas that help prevent vendor lock-in and enhance the ability to move applications and databases between technologies. From a data access layer then JDBC and ODBC are common connection methodologies to databases. Relational DBMS vendors often add more proprietary functions to ANSI SQL standards and these can make migrations more complex and time consuming. Non-standard features include data types and built in functions but even more problematic can be procedural languages used with applications to access the database. Usage of such proprietary features can sometimes mean that as the business needs and SLAs change it is difficult and complex to re-architect application given a desire not to also have to recode procedural calls or built-in functions being used. Compatibility layers can help alleviate some of these issues and enable porting tools and base migrations to have much greater coverage. This can assist not only where satisfying business needs are become challenging with an RDBMS but also where database consolidation is being considered to reduce database sprawl and take advantage of multi-tenancy.

How can HPE NonStop SQL/MX help?

HPE’s IT organisation has been on a journey to modernise taking into account cloud enablement, data analytics, a digital core, use of DevOps tools, hybrid IT infrastructure (with on-premise deployment), self-service development capabilities through a portal giving database choices, rationalisation of applications and consolidation of database instances (around 25,000 have been observed) using multi-tenancy techniques in many cases. The initiative is around reducing costs, improving execution, simplifying management and redistributing IT budgets from maintenance to innovation. HPE and the PointNext organisation has therefore experience in this modernisation and consolidation journey and can assist customers.

HPE has a “secret weapon” in the NONSTOP SQL/MX relational database management system. This has been added to the portfolio of options made available through a developer’s portal. Using NONSTOP SQL/MX as a database consolidation enabler for HPE’s transactional engine saves licensing and support costs whilst bring over 40 years of proven NonStop reliability to the IT environment.

Why did HPE choose NONSTOP SQL/MX as one of the tools used in the modernisation architecture?

NONSTOP SQL/MX has a single license and support cost model which provides the following standard features:

- Database Services for automated “cloud-like” rapid deployment of instances in minutes and return to the pool once finished with

- Multi-tenancy capabilities allowing multiple database instances to share the same infrastructure but with security and isolation and preventing database sprawl and improving infrastructure efficiency

- Partitioning to allow distribution and balancing of workload with online partition management

- Scalable audit log to handle high velocity of transactions

- Parallel optimisation and parallel execution to provide best query plans and minimise query response times

- MPP (massively parallel processing) architecture with no distributed lock manager required or specialized hardware with around 98.2% linear scaling per node such that resources are added as needed and the new resource provides the same extra capacity as the previous resource with scaling from 2 to over 4000 nodes

- Fault Tolerance meaning backup processes transparently takeover work from a failed resource in at most seconds which is imperceptible from the user. Faults are immediately isolated and contained

- Compatibility with the most common Oracle data types and built in functions as well as PL/SQL procedural language compatibility layer for ease of porting

- Mixed workload to allow query priorities to be set and maintained based upon response time needs

- Integration into Liquibase for automated database change control within a DevOps environment and support for Git and Jenkins with NonStop

- Deployment on traditional infrastructure or virtualised on-premise cloud environment using a reference architecture thus supporting a Hybrid infrastructure

- Access with standard DBA tools such as DB Visualizer

- Designed and proven simplicity for scalable and mission critical environments

Benefits of HPE NonStop SQL/MX

Simplicity of architecture – no complex multiple data replica instances or clustering required to achieve scalability, availability or sharing of data amongst applications as data velocity grows. Design once and the NonStop infrastructure and SQL/MX DBMS grows with your business needs by adding CPUs (nodes) as you need them.

Simple licensing structure – everything is included in one license; parallelism, fault tolerance, MPP scaling, partitioning, database services with auto-provisioning and multi-tenancy plus compatibility features such as PL/MX. This means predicable project costs based purely on capacity needs.

No compromises – MPP scalability and fault tolerance comes as standard and there is no special software or hardware architecting to undertake as HPE do this for customers.

Simple lifecycle management – As well as no trade-offs between availability and scalability there’s no need to reintegrate hardware or software or retune when new versions arise because HPE undertake this for customers. This leaves customers to focus on change to support business innovation not maintenance and plumbing.

Lack of complexity saves costs – one license and one support charge covering multiple database instances using multi-tenancy, no need to redesign, reintegrate and retune the architecture to handle growth, MPP scaling with fault tolerance as standard saves time, staff and Opex costs as well as providing business consistency to meet SLAs. Complexity is the enemy of availability and risk. Trust Networks reported 50% Opex cost savings moving to SQL/MX for their transaction management system. (Takuma Suzuki, Director, Trust Networks Inc.)

Modern – integration into DevOps environments and change management tools such as Liquibase as well as choice of traditional or virtualised on-premise cloud infrastructure deployment.

Be the first to comment