Many years ago now, a company known as Tandem was struggling to stay viable in the new world of client-server. This was in the mid-1990s, and the CEO of Tandem, Roel Pieper, was looking at partnering with Microsoft, the main company driving client-server architecture. Microsoft was struggling to move up into the enterprise space, needed a more robust solution, and decided to add a clustering option, code-named Wolfpack, to Windows. Several vendors, including Tandem, contributed to Wolfpack, which became the Windows clustering software.

During this time, Tandem decided to do a major presentation with Microsoft and put together a demo called 2-Ton. The name referred to a 2 terabyte (in those days monstrous) database partitioned over 64 Windows servers. The May 14th press release in 1997 stated: “Microsoft Corp. and Tandem Computers Inc. demonstrated the world’s largest Microsoft® Windows NT® Server network operating system-based system linking 64 Intel Pentium Pro processors in a cluster using Tandem’s ServerNet interconnect technology. The system managed a 2 terabyte database with a 30-billion row table that was based on Dayton-Hudson’s data warehouse, which manages retail outlets such as Target and Mervyn’s stores.” ServerNet had briefly been introduced as a networking card available to put into Windows servers.

The following month Tandem was acquired by Compaq Computers Inc. The database software used on the Windows cluster was an early version of SQL/MX. It was released briefly for sale on Windows about six months after the Tandem acquisition. It was on the market for about four days before Compaq realized it was suddenly competing with its two biggest partners, Microsoft (SQL Server) and Oracle. Compaq pulled the database software from a stunned market. NT magazine had reviewed the product and stated it was five years ahead of SQL Server and at least 18 months ahead of Oracle in terms of scale and reliability.
Many supposed that Microsoft or Oracle were in secret negotiations to acquire SQL/MX, but the product, or at least the Windows product, just faded from memory. Of course, SQL/MP had been running on Tandem since the 1980s and was constantly improving. It was the database associated with Zero Latency Enterprise (ZLE), which was another (at the time) massive demonstration utilizing 110 terabytes partitioned across a 128-processor Tandem system. Not too long after the ZLE demonstration, Compaq was acquired by HP. HP implemented its own ZLE system, known as iHub, integrating the various SAP instances across both companies in real time. It won numerous awards from the Winter group in 2005.

About that time the mayhem caused by Y2K was mostly over, and venture capital firms started investing again in startups. Among the most popular were data analytics companies selling small database appliances that could load data quickly and allow analytics to start against the data almost as soon as it was loaded. Companies like Netezza, Datallegro and many others were suddenly in the market challenging Oracle, Teradata and IBM, or at least making inroads into accounts with quick and easy data analysis. All these new solutions were based on a massively parallel, shared-nothing architecture.

The R&D folks inside NonStop felt they could launch a database appliance product in short order since we already had a massively parallel, shared-nothing architecture. They also dusted off SQL/MX as the database they wanted to use on this new appliance. A short time later the system, code-named Neo, was being tested in the NonStop labs. It was designed to compete with the other startup appliances but had the distinct advantage of a tried and true database with a large company (not VC money) behind it. This was about the time Mark Hurd became CEO of HP. Mark had led Teradata and knew about Tandem/NonStop. He quickly learned of the Neo skunkworks project and wanted to know more.
Neo was tested against the Walmart database benchmark and fared very well. Mark felt Neo should become an enterprise data warehouse (EDW), not a database appliance, so the direction was changed, money was invested, and Neoview was released into the market. Mark also wanted HP’s IT to create an EDW using Neoview, and Randy Mott, the CIO at the time, was tasked with migrating a decentralized environment of more than 700 data marts into a single EDW. His bonus was based on getting this, and many other things, accomplished in three years. He was successful. Neoview, running a superset of SQL/MX, was the EDW for HP for many years, even after Neoview was, let us say, decommissioned.

After the tumultuous exit of Mark Hurd from HP, executives, perhaps bitter over Mark’s tenure and leadership, killed Neoview in what seemed to be an ill-conceived plan to ‘get back at Hurd.’ Neoview was just coming into its own in terms of performance and stability when the end of sale was suddenly announced. I say ill-conceived because there was no migration plan for customers. It appeared that HP was simply getting out of the Big Data and analytics business just as it was catching fire. Randy Mott picked up the Neoview resources since he had an EDW to run and maintain, even if it was now EOS (End-Of-Sales). It took many years, but the Neoview EDW was eventually replaced by a combination of Vertica and Hadoop. It was bad news for Neoview, but the many additions created to support the massive HP EDW have been incorporated back into the NonStop SQL/MX of today, and that is very good news for customers taking advantage of this very robust database.

Back in the early days of Neoview, it supported many of the Teradata commands. Today SQL/MX has been expanded to support database compatibility, making it easier to migrate other vendors’ databases onto NonStop. Should you consider such a migration?

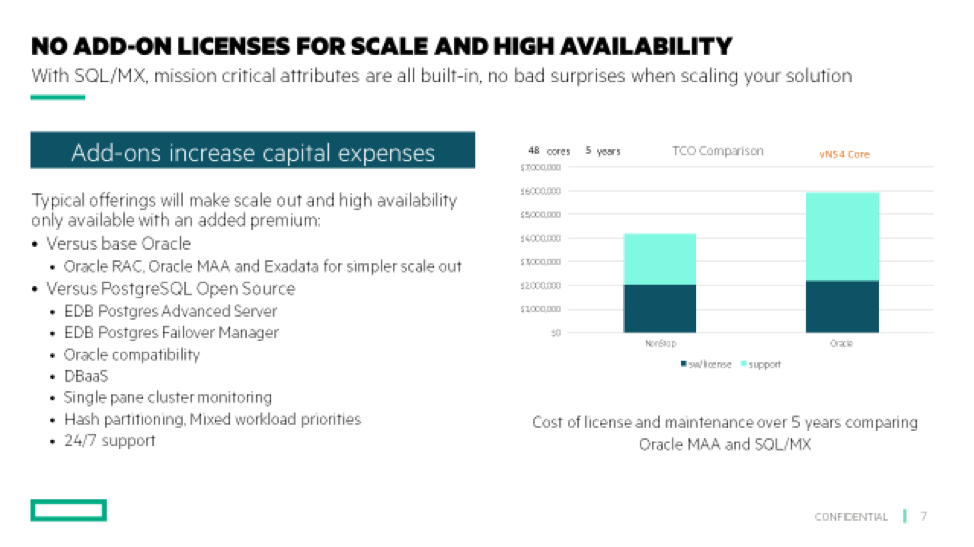

From a capital expense standpoint, would it be less expensive to run the application on NonStop or keep it where it is? When considering a migration to NonStop, think of mission-critical or high-volume databases, or databases that need to scale, to take full advantage of NonStop. Compared with Oracle RAC systems, which are expensive, complicated, and require specialized DBAs, NonStop has lower costs, easier configurations, and smaller staffing needs. Similarly, compared with Enterprise DB (Postgres), an open-source database, the cost, skillset, and setup are still better and less expensive on NonStop.

Figure 1

Many open-source databases claim to have mission-critical capabilities, but the question is at what price and with what effort? With many vendors and open-source databases, you often need to go back to the drawing board before you move to mission-critical availability and from scale-up to scale-out. Those changes often need very specialized DBAs who can rarely satisfy the complex needs of different applications. While the initial cost of an open-source database may appear lower, costs will quickly rise as you design and assemble the final solution, making sure to avoid single points of failure. An inability to meet performance goals is often rectified with specialized hardware, as with Exadata. For NoSQL, queries may be added after the fact, requiring MapR developers, or a non-ANSI SQL database such as Cassandra or another NoSQL database may be added, moving from ACID (Atomicity, Consistency, Isolation, Durability) to BASE (Basically Available, Soft state, Eventual consistency) capabilities. Adding availability and scale after the fact is costly and adds complexity, which is the enemy of availability.

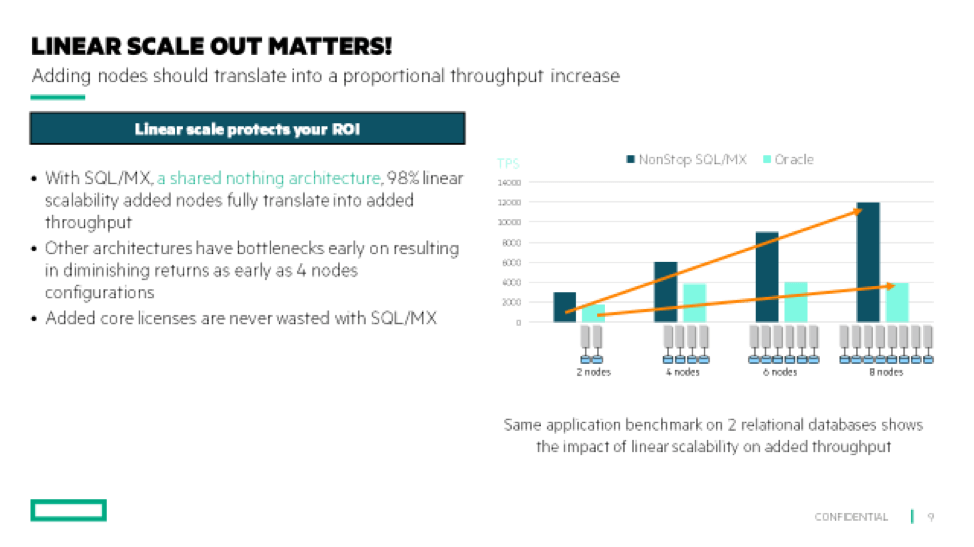

Additionally, if the database grows in a scale-out fashion, there is a predictable cost for growth and a linear expansion of the NonStop system and the database. NonStop SQL/MX uses local lock managers, part of the shared-nothing architecture, which provide this linear scale. Oracle, with its central lock manager, has created a bottleneck to scale. As seen in the charts, adding nodes to RAC does not increase performance the way adding nodes to NonStop does. If you are planning to grow, the best performance increase with the most predictable costs is with NonStop.

Figure 2

When I was working on the Neoview systems, I happened to be working on an internal project with an HP Oracle specialist who had successfully competed against Teradata. Since he and I both worked for HP, he was telling me about the benchmarks he had conducted for HP using Oracle. “Teradata, like Neoview (NonStop) comes pretty well configured out of the box. When Teradata would set up for a benchmark they would almost always be faster than Oracle, but we’d spend 6 to 8 weeks tuning Oracle, and we’d usually be able to beat the Teradata performance. With a known query load we can tune Oracle really well. The problem, in real life, is that rarely do the query loads remain static. When they change, you really need to retune Oracle to get good performance. If your query profile changes a lot a system like Neoview (NonStop) is much easier to tune and delivers overall better performance.” That story also demonstrates the difference in staffing requirements. As another database professional said, “Oracle has a lot of bells, whistles, and buttons to push. You can eventually tune a system really well, although tuning is sort of continuous. On NonStop there’s just not that many things to muck around with.” In many studies, the staff required for NonStop has been less than the staff required for competitive offerings.

As most know, NonStop is rated at IDC availability level 4 (AL4). That is the highest tier for availability and means that when a failure occurs on an AL4 system, the users are unaware of the failure. NonStop is not the only vendor to have this rating, but there is a difference. For IBM and Oracle AL4 systems, IDC indicates specific configurations that are considered AL4. NonStop is the only major vendor that provides AL4 out of the box; no special configurations or hardware are needed to achieve it. Also, in terms of actual availability, NonStop has the highest availability in the industry. According to Gartner, the cost of IT downtime is $5,600 per minute, and up to $50,000 per minute for Fortune 500 companies (*). According to IDC, financial services and telco are the industries where downtime is most costly (**). While everyone claims to have high availability features, very few offerings are able to achieve 99.999% availability (about 5 minutes of downtime per year). In fact, the majority of platforms using high availability features achieve at best 99.99% availability, or about 50 minutes of downtime per year (***). So there is a delta of about 45 minutes between 99.99% and 99.999% availability. Multiplying those 45 minutes by the $50,000 per minute downtime cost gives a cost of over $2,000,000 per year. If you know your downtime costs, you can determine your own numbers.
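To determine your own numbers, the downtime arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, a 365-day year is assumed, and the $50,000 per minute figure is the upper Gartner estimate quoted above, which you would replace with your own cost.

```python
# Minimal sketch of the downtime-cost arithmetic; all names are illustrative.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, assuming a 365-day year


def downtime_minutes_per_year(availability_pct: float) -> float:
    """Expected minutes of downtime per year at a given availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


def annual_cost_of_gap(lower_pct: float, higher_pct: float,
                       cost_per_minute: float) -> float:
    """Yearly cost of the extra downtime at the lower availability level."""
    extra_minutes = (downtime_minutes_per_year(lower_pct)
                     - downtime_minutes_per_year(higher_pct))
    return extra_minutes * cost_per_minute


if __name__ == "__main__":
    four_nines = downtime_minutes_per_year(99.99)
    five_nines = downtime_minutes_per_year(99.999)
    # Assumed cost: the upper figure quoted in the article; use your own here.
    cost = annual_cost_of_gap(99.99, 99.999, cost_per_minute=50_000)
    print(f"99.99%  -> {four_nines:.1f} minutes of downtime per year")
    print(f"99.999% -> {five_nines:.1f} minutes of downtime per year")
    print(f"Cost of the gap: ${cost:,.0f} per year")
```

Without rounding, the figures come to roughly 52.6 and 5.3 minutes per year, a gap of about 47 minutes, so the rounded 50, 5 and 45 minute values used in the text are slightly conservative.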

NonStop SQL/MX has a long history. From the real-time operational data store (ZLE) days to the enterprise data warehouse (Neoview) background, SQL/MX has proven it is both available and massively scalable. When we make accurate cost comparisons, NonStop is generally less expensive than its competitors. It is much simpler to set up and run, letting you get to production faster, all while requiring less staffing. Besides all that, it has a proven track record of being the most available system and database in the market. New features, open-source capabilities (DevOps) and API support are being added and enhanced with every release. Please consider NonStop SQL/MX when you need an available, reliable and scalable database for your next application.
