Virtual NonStop

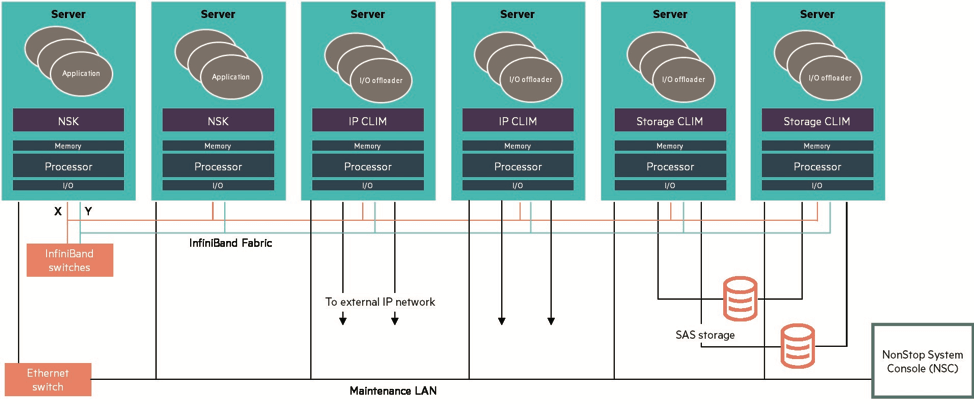

Interest in virtual NonStop has grown considerably since support for the VMware hypervisor was added. I am aware of many proofs of concept testing virtual NonStop. There still seems to be some confusion about what virtual NonStop means exactly. Many jump to the conclusion that NonStop can now be run in a public cloud such as AWS or Azure. This is not currently possible, because making virtual NonStop actually ‘NonStop’ requires some fairly stringent configurations, and these are not readily available to configure in a public cloud. Whether it can run in a cloud at all depends on your definition of cloud; in general, yes, if you mean a private cloud, one where the user has some control over the environment.

Virtual NonStop, for starters, needs a pair of high-speed switches to interconnect the virtual machines (VMs). These switches should support 40-100GbE, and there must be two separate, independent switches that operate as the X and Y fabrics do on our platform hybrid systems (NS7 and NS3). On the platform systems, a NonStop consists of at least a pair of x86 blades, at least two DL380s for the Storage CLIMs and another pair of DL380s for the IP CLIMs. Additionally, there is a Windows system running the NonStop Console.

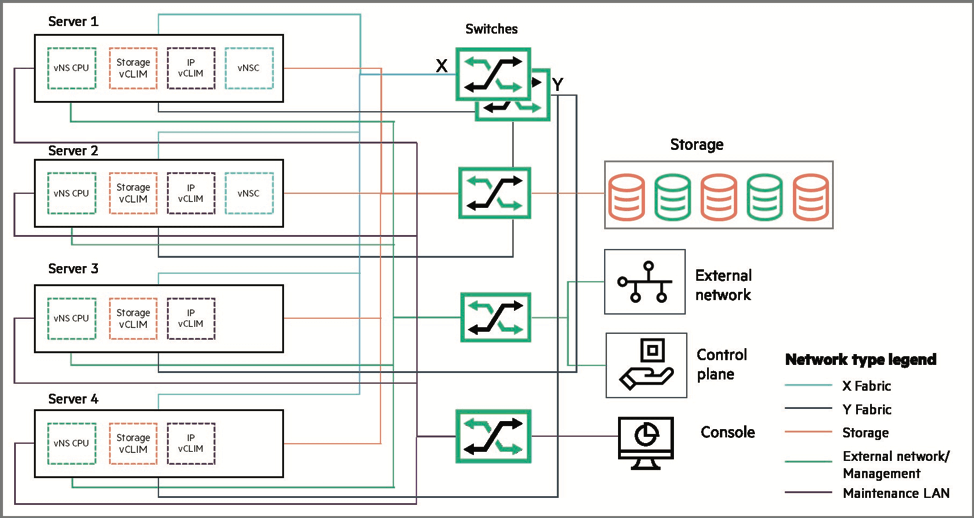

To create a virtual NonStop, we still need all the components, but they have become virtual machines (VMs). There is a VM that runs the NonStop operating system, called a vCPU, and we need at least two vCPU VMs for the system to be NonStop. Those two VMs must not run in the same physical server. I will load VMware ESXi on a physical server; let’s call it Phy1. I will load ESXi on a second server, called Phy2. Running ESXi allows VMs to share that server. So I would run vCPU0 in Phy1 and vCPU1 in Phy2. Phy1 and Phy2 must both have a high-speed (40GbE) two-port NIC, where port 1 is connected to one of the high-speed switches (fabric X) and the other port is connected to the other switch (fabric Y). Now vCPU0 and vCPU1 can connect with each other and exchange “I’m alive” messages.

In Phy1 I would also load a storage CLIM VM, vSCLIM1, and an IP CLIM VM, vIPCLIM1, and on Phy2 I would do the same, starting vSCLIM2 and vIPCLIM2. The CPUs and CLIMs communicate over the high-speed switches just as they do over the InfiniBand fabric on the NonStop platform systems. In either Phy1 or Phy2, I would start a Windows VM and then load the NSC software onto it, creating the NonStop Console, which also communicates with all the other VMs (CPUs, IP CLIMs and Storage CLIMs) over the high-speed switches. The ‘Hardware Architecture Guide for HPE Virtualized NonStop on VMware’ documents how to count up the cores, memory and storage that will be required, because unlike other VMs, NonStop does not like to share resources.
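The Phy1/Phy2 layout described above can be written down as a small model and checked against the two rules stated in the text: no two vCPU VMs in the same physical server, and every server cabled to both fabric X and fabric Y. This is only an illustrative sketch, not an HPE or VMware tool. The `Host` class and `check_layout` function are hypothetical names, and treating every additional vCPU as needing its own server is a simplifying assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical ESXi server (hypothetical model for illustration)."""
    name: str
    fabrics: set                      # fabrics the NIC ports are cabled to
    vms: list = field(default_factory=list)


def check_layout(hosts):
    """Return a list of problems; an empty list means these checks pass."""
    problems = []
    for host in hosts:
        missing = {"X", "Y"} - host.fabrics
        if missing:
            problems.append(
                f"{host.name}: not connected to fabric {', '.join(sorted(missing))}"
            )
    # One entry per vCPU VM, recording which physical server it runs on.
    cpu_hosts = [h.name for h in hosts for vm in h.vms if vm.startswith("vCPU")]
    if len(cpu_hosts) < 2:
        problems.append("fewer than two vCPU VMs")
    if len(cpu_hosts) != len(set(cpu_hosts)):
        problems.append("two vCPU VMs share a physical server")
    return problems


# The layout from the text: one vCPU, one storage CLIM and one IP CLIM per
# server, with the NonStop Console (NSC) VM placed on Phy1.
phy1 = Host("Phy1", {"X", "Y"}, ["vCPU0", "vSCLIM1", "vIPCLIM1", "NSC"])
phy2 = Host("Phy2", {"X", "Y"}, ["vCPU1", "vSCLIM2", "vIPCLIM2"])

layout_problems = check_layout([phy1, phy2])
print(layout_problems or "layout OK")
```

Putting both vCPUs on Phy1, or leaving one NIC port uncabled, makes `check_layout` report the corresponding problem instead of an empty list.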

So a virtual NonStop system requires a specific number of cores (not threads!), a specific amount of unshared memory and dedicated datastores. It also requires physical separation of the servers, yet close proximity, because of the switches. That’s a lot of requirements for a public cloud but not so much for a private cloud. Virtual NonStop does provide flexibility in terms of the hardware for the system. Various servers, networking switches and storage systems can be supported. But of course, NonStop may not have tested every combination of server, network and storage that might be configured.

If you are not currently on the x86 NonStop platform, I suggest getting to NonStop X by first migrating to the HPE NonStop hybrid platforms, currently NS7 and NS3. Get comfortable with the L-series OS and enjoy the enhanced performance and better open-source integration (perhaps explore DevOps). If your company wants to standardize hardware and wants to virtualize, then convert your Dev/Test systems to vNonStop. They will run the very same L-series OS as your platform X systems. Once you are comfortable with vNonStop (how to configure it and run it under VMware), then convert your Prod and DR. This provides the least risk and greatest benefit for NonStop users.
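Because NonStop VMs want whole cores and unshared memory, sizing a server is a plain sum with no oversubscription. Here is a minimal sketch of that arithmetic. Every core and memory figure in it is a made-up placeholder, not a value from the HPE hardware architecture guide, and the names `VmNeed` and `fits_without_sharing` are hypothetical. The sketch also ignores whatever the hypervisor itself needs.

```python
from dataclasses import dataclass


@dataclass
class VmNeed:
    """Dedicated resources for one VM (placeholder values only)."""
    name: str
    cores: int       # whole physical cores, not hyperthreads
    memory_gb: int   # reserved, unshared memory


def fits_without_sharing(host_cores, host_memory_gb, vms):
    """Sum dedicated needs and compare them with what the server has."""
    total_cores = sum(vm.cores for vm in vms)
    total_mem = sum(vm.memory_gb for vm in vms)
    fits = total_cores <= host_cores and total_mem <= host_memory_gb
    return fits, total_cores, total_mem


# Placeholder figures for the VMs placed on Phy1 in the example layout.
phy1_vms = [
    VmNeed("vCPU0", cores=4, memory_gb=64),
    VmNeed("vSCLIM1", cores=4, memory_gb=16),
    VmNeed("vIPCLIM1", cores=4, memory_gb=16),
    VmNeed("NSC", cores=2, memory_gb=8),
]

ok, cores, mem = fits_without_sharing(host_cores=16, host_memory_gb=128, vms=phy1_vms)
print(f"fits={ok} cores={cores} memory_gb={mem}")
```

For real sizing, take the per-VM numbers from the ‘Hardware Architecture Guide for HPE Virtualized NonStop on VMware’ rather than from this example.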
