As AI matures from pilot projects to production systems, data leaders are asking a hard question: why is some of their most critical data—from the systems that run the business—still out of reach?

In truth, this gap is often a byproduct of how massively parallel processing (MPP) systems are intentionally designed. HPE Nonstop, for instance, is purpose-built for high-throughput transaction processing and fault tolerance, which means it operates with a degree of isolation that protects its integrity.

But as organizations expand their data strategies to adopt cloud platforms, machine learning models, and near-instant analytics pipelines, they find themselves needing visibility into the data that’s safely managed and guarded in Nonstop.

Use Striim to Leverage Real-Time Data on HPE Solutions

To meet these requirements, many teams have historically defaulted to heavyweight ETL: extract, land, transform, load. While this model can get the job done, in 24/7 environments it is brittle and slow, puts unnecessary stress on infrastructure, and delivers data that is already out of date by the time it’s consumed.

Take, for example, a large payments processor that settles transactions in under a second. Each event touches multiple systems (authorization, fraud scoring, ledger updates) and is stored in Nonstop databases designed to ensure nothing is lost or duplicated. Extracting that data for analytics means either interrupting the workflow or running intrusive, high-overhead jobs that replay transaction logs in bulk or snapshot entire tables. Both options add latency and risk breaking fidelity, and neither is acceptable.

Striim offers a fundamentally different approach. Instead of treating Nonstop data as something to be retrieved and reprocessed later, Striim streams it in real time, as events unfold, without disruption or delays.

It’s a natural fit with HPE Nonstop: pipelines built on change data capture (CDC) deliver data with low latency while respecting Nonstop’s fault-tolerant design. This extends the value of existing Nonstop systems, allowing customers to feed cloud analytics, machine learning pipelines, and operational dashboards with current, trusted data delivered to platforms like Google BigQuery, Microsoft Fabric, and Snowflake, among others.

Striim is now available directly through the HPE Nonstop price book, simplifying procurement and support for teams that already manage complex infrastructure. It’s a logical evolution that removes roadblocks for customers looking to modernize without reinventing stable systems.

Striim Unlocks Nonstop Data for Enterprise Cloud

While organizations once believed all data would live in a single enterprise system, experience has proven otherwise. Critical data, especially, doesn’t live in one place, and insights don’t emerge from lagging reports.

With Striim, Nonstop users can stream data from Nonstop SQL/MX, SQL/MP, or Enscribe databases into a variety of targets like Oracle, PostgreSQL, and MySQL. Striim supports high-throughput, zero-downtime environments and makes operational data immediately available across hybrid or multi-cloud architectures.

This way, HPE Nonstop remains the enterprise engine for core banking, payments, telecom billing, and other high-throughput use cases. In these cases, systems can’t go down or be re-platformed easily, but they can be extended.

By using Striim, enterprises can:

- Feed Nonstop data into real-time dashboards for operations and risk teams

- Continuously populate data warehouses and lakes (e.g., Google BigQuery, Microsoft Azure, Snowflake, Databricks) for model training

- Detect anomalies within milliseconds across transactional and behavioral data

- Enable digital twins of core systems for simulation and testing

Unlike legacy ETL, this approach builds on what already works, bringing Nonstop into an organization’s broader data fabric. For example, a card issuer could use Striim to continuously stream approved and declined transaction data from HPE Nonstop SQL/MX into Google BigQuery. Each transaction record is enriched mid-stream with customer metadata, such as geographic location, account status, and velocity indicators, from an external CRM system.

Once in BigQuery, a scheduled query runs every 60 seconds to identify patterns such as repeated high-value transactions, mismatched geolocations, or new merchant IDs. When thresholds are met, the query result triggers a Cloud Function that sends an alert to the fraud operations dashboard and logs the incident for downstream review—closing the loop within two minutes of the initial swipe.
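In the scenario above, the 60-second check would be SQL running in BigQuery. Purely to make the rule logic concrete, here is a minimal Python sketch of the same three checks over one window of enriched transactions. The thresholds, field names, and the `EnrichedTxn` and `evaluate_window` names are hypothetical assumptions, not part of any Striim or Google Cloud API.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical thresholds; the scenario above does not specify values.
HIGH_VALUE = 1_000.00   # amount at or above which a transaction counts as high value
REPEAT_LIMIT = 3        # high-value transactions per card per window before flagging


@dataclass(frozen=True)
class EnrichedTxn:
    card_id: str
    amount: float
    merchant_id: str
    txn_country: str            # where the transaction took place
    home_country: str           # added mid-stream from the (hypothetical) CRM lookup
    known_merchants: frozenset  # merchant IDs this card has used before


def evaluate_window(txns):
    """Return {card_id: sorted rule names} for one window of transactions."""
    alerts = defaultdict(set)
    high_value_counts = defaultdict(int)
    for t in txns:
        # Rule 1: repeated high-value transactions on the same card.
        if t.amount >= HIGH_VALUE:
            high_value_counts[t.card_id] += 1
            if high_value_counts[t.card_id] >= REPEAT_LIMIT:
                alerts[t.card_id].add("repeated_high_value")
        # Rule 2: transaction location does not match the customer's home country.
        if t.txn_country != t.home_country:
            alerts[t.card_id].add("geo_mismatch")
        # Rule 3: a merchant this card has never used before.
        if t.merchant_id not in t.known_merchants:
            alerts[t.card_id].add("new_merchant")
    return {card: sorted(rules) for card, rules in alerts.items()}


window = [
    EnrichedTxn("c1", 1500.0, "m1", "US", "US", frozenset({"m1"})),
    EnrichedTxn("c1", 1200.0, "m1", "US", "US", frozenset({"m1"})),
    EnrichedTxn("c1", 2000.0, "m9", "FR", "US", frozenset({"m1"})),
    EnrichedTxn("c2", 20.0, "m2", "US", "US", frozenset({"m2"})),
]
print(evaluate_window(window))
# {'c1': ['geo_mismatch', 'new_merchant', 'repeated_high_value']}
```

Cards that come back from a check like this are what the alerting step (the Cloud Function in the scenario) would act on; cards with no matching rule are simply absent from the result.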

Now, as a deeply integrated partner in the HPE Partner Ready program, Striim helps Nonstop customers continuously modernize their legacy systems without ripping and replacing them or rewriting everything from scratch.

Accelerating the Data Journey to AI

With real-time integration established, Striim and HPE are helping enterprises advance their data maturity step by step. From foundational ingestion to AI-powered decision-making, each phase introduces new technical requirements. Striim complements HPE Nonstop by providing the infrastructure to unlock and operationalize data at every stage along the journey to AI, without compromising the transactional consistency these systems are built to deliver:

- Cloud Migration & Adoption

Striim uses log-based change data capture (CDC) to non-invasively extract transactions from HPE Nonstop databases (SQL/MP, SQL/MX, or Enscribe) and stream them to cloud services like Microsoft Fabric or Snowflake. This allows organizations to start leveraging cloud infrastructure immediately, without pausing, snapshotting, or duplicating data.

- Data & Platform Modernization

Striim performs in-flight transformations on streaming data, so Nonstop transactions can be enriched, filtered, and mapped to downstream schemas before they land. Teams can apply business rules using SQL or Java to reshape legacy records and make them compatible with modern data models without modifying source systems.

- Upstream Analytics

Once in the cloud, Nonstop data becomes accessible to real-time BI platforms. For example, a telecom provider streams call and billing records from HPE Nonstop into Microsoft Fabric, where data is queried every 15 seconds to drive dashboards showing live usage, performance anomalies, and plan thresholds. Teams use this for proactive alerts and real-time customer engagement.

- AI Enablement

With consistent, real-time data flowing from Nonstop into platforms like Snowflake or Databricks, data teams can train and retrain models at production speed. Because Striim ensures schema consistency and handles transformations in-stream, data teams don’t need to build separate preprocessing pipelines. Features, labels, and scoring inputs are maintained as part of the live stream, cutting days off model iteration and deployment cycles.
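As a rough illustration of the capture, transform, and apply flow behind the first two phases, here is a minimal Python sketch. It does not use Striim’s API: the `ChangeEvent` shape, the legacy column names (`TXN_ID`, `AMT_CENTS`, `CUST_ID`, `STATUS`), the `CUSTOMER_SEGMENT` lookup, and the in-memory dict standing in for a cloud target are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical enrichment data, e.g. loaded from a customer reference table.
CUSTOMER_SEGMENT = {"cust-1": "retail", "cust-2": "business"}


@dataclass(frozen=True)
class ChangeEvent:
    op: str              # "INSERT", "UPDATE", or "DELETE"
    key: str             # primary key of the source row
    row: Optional[dict]  # after-image of the row; None for deletes


def transform(row: dict) -> Optional[dict]:
    """Filter, enrich, and map one legacy record to a downstream schema."""
    if row["STATUS"] == "TEST":                      # filter: drop test traffic
        return None
    return {
        "txn_id": row["TXN_ID"].strip(),             # trim fixed-width padding
        "amount": row["AMT_CENTS"] / 100,            # cents -> currency units
        "customer_id": row["CUST_ID"],
        "segment": CUSTOMER_SEGMENT.get(row["CUST_ID"], "unknown"),  # enrich
    }


def apply_changes(events, target: dict) -> dict:
    """Apply change events in log order to a keyed target (a dict stand-in)."""
    for ev in events:
        mapped = None if ev.op == "DELETE" else transform(ev.row)
        if mapped is None:
            # Deletes and filtered rows are removed so no stale copy remains.
            target.pop(ev.key, None)
        else:
            target[ev.key] = mapped                  # upsert for INSERT/UPDATE
    return target


events = [
    ChangeEvent("INSERT", "t1", {"TXN_ID": "t1  ", "AMT_CENTS": 1250, "CUST_ID": "cust-1", "STATUS": "OK"}),
    ChangeEvent("INSERT", "t2", {"TXN_ID": "t2", "AMT_CENTS": 99, "CUST_ID": "cust-9", "STATUS": "TEST"}),
    ChangeEvent("UPDATE", "t1", {"TXN_ID": "t1", "AMT_CENTS": 1300, "CUST_ID": "cust-1", "STATUS": "OK"}),
]
print(apply_changes(events, {}))
# {'t1': {'txn_id': 't1', 'amount': 13.0, 'customer_id': 'cust-1', 'segment': 'retail'}}
```

The key design point is that the source rows are never modified: all reshaping happens on the event in flight, and the target only ever sees the mapped, enriched record.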

For HPE customers in highly regulated industries, Striim offers both capability and assurance. With certifications and compliance coverage for PCI DSS 4.0, HIPAA, SOC 2, and more, the solution supports environments where precision, traceability, and data control are non-negotiable.

Other key Striim features include:

| Feature | Description |
| --- | --- |
| Low-code GUI | HPE customers can quickly set up and manage data streams using Striim’s low-code user interface. |
| Deploy on-premises or in the cloud | Striim can be deployed either on-premises or in the cloud. |
| Zero-downtime data streaming | Use Striim’s low-code interface to quickly migrate and continuously replicate data between a variety of sources and targets. |
| E1P and Recoverability | Striim supports exactly-once processing (E1P) guarantees for specific source/target combinations and includes out-of-the-box recoverability, ensuring zero data loss. |
| Scalability | Striim can scale horizontally or vertically to handle the toughest enterprise workloads. |
| Automation | Striim data pipeline applications can be developed as text-based files, integrated into CI/CD pipelines, and deployed via a REST API. |
| Monitoring Suite | Striim includes UI and console-based monitoring tools to ensure data pipelines are running smoothly. |
| Striim AI | With the fifth generation of its platform, Striim released new AI features that automatically detect and protect sensitive data. |
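The E1P row refers to exactly-once processing. A common general technique for getting exactly-once effects on top of at-least-once delivery is to store the last applied source position alongside the data and skip anything at or below it when a batch is replayed after a failure. The Python sketch below illustrates that idea under simplifying assumptions (a single ordered source, an in-memory target); it is not a description of how Striim implements E1P, and `Event` and `CheckpointedSink` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    position: int  # source log position; strictly increasing
    key: str
    value: str


class CheckpointedSink:
    """Hypothetical target that keeps its data and checkpoint together."""

    def __init__(self):
        self.rows = {}
        self.checkpoint = -1  # highest source position already applied

    def write_batch(self, batch) -> int:
        """Apply a batch, skipping events at or below the checkpoint."""
        fresh = [e for e in batch if e.position > self.checkpoint]
        # In a real target, the row writes and the checkpoint update would be
        # committed in one transaction so they succeed or fail together.
        for e in fresh:
            self.rows[e.key] = e.value
        if fresh:
            self.checkpoint = max(e.position for e in fresh)
        return len(fresh)


sink = CheckpointedSink()
print(sink.write_batch([Event(1, "a", "x"), Event(2, "b", "y")]))  # 2
# Simulated restart: the source replays from position 1 (at-least-once delivery).
print(sink.write_batch([Event(1, "a", "x"), Event(2, "b", "y"), Event(3, "c", "z")]))  # 1
print(sink.rows)  # {'a': 'x', 'b': 'y', 'c': 'z'}
```

Because the replayed events at positions 1 and 2 are skipped, each source change takes effect exactly once even though it was delivered twice.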

Striim’s Real-Time AI

Striim recently unveiled three new tools that enable real-time data management and protection for enterprise-level data: Sherlock AI, Sentinel AI, and CoPilot.

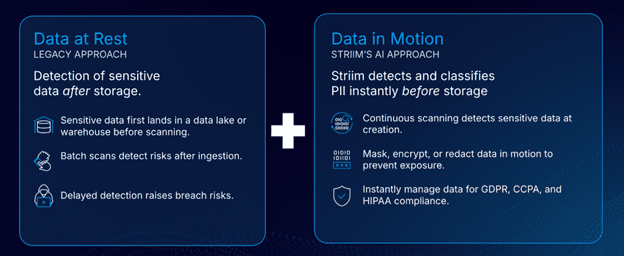

Sherlock AI brings intelligence to the start of the data pipeline by scanning source systems for sensitive data before any movement occurs. Powered by trained AI models, Sherlock can automatically detect patterns like credit card numbers, social security numbers, and other regulated data types—helping organizations make informed decisions about what should or shouldn’t leave the source.

Sherlock is seamlessly integrated into Striim’s low-code interface, enabling users to quickly identify the types of sensitive data and the exact columns where they reside. This early visibility supports better governance, reduces downstream risk, and keeps compliance considerations front and center from the beginning.
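Sherlock relies on trained AI models. To show the general idea of column-level discovery in a few lines, the Python sketch below swaps in a much simpler rules-based approach: regular expressions, plus a Luhn checksum to weed out random digit strings that merely look like card numbers. The patterns, the `scan_columns` function, and the sample column names are hypothetical; production detection would need far broader coverage.

```python
import re

# Simple rules-based patterns; a stand-in for Sherlock's trained models.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")  # 13-19 digits, optional separators


def luhn_ok(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_columns(rows, sample_size=1000):
    """Return {column: set of detected data types} from a sample of rows."""
    findings = {}
    for row in rows[:sample_size]:
        for col, value in row.items():
            if not isinstance(value, str):
                continue
            types = set()
            if SSN_RE.search(value):
                types.add("ssn")
            if any(luhn_ok(re.sub(r"\D", "", m.group())) for m in CARD_RE.finditer(value)):
                types.add("credit_card")
            if types:
                findings.setdefault(col, set()).update(types)
    return findings


sample = [
    {"NAME": "A. Smith", "CARD_NO": "4111 1111 1111 1111", "NOTES": "call back"},
    {"NAME": "B. Jones", "CARD_NO": "1234 5678 9012 3456", "NOTES": "SSN 123-45-6789 on file"},
]
print(scan_columns(sample))
# {'CARD_NO': {'credit_card'}, 'NOTES': {'ssn'}}
```

Note that the second card-like value fails the Luhn check and is not counted, while the SSN hiding in a free-text `NOTES` column is surfaced: the kind of "exact column" visibility the paragraph above describes.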

Sentinel AI acts as a real-time safeguard for sensitive data as it moves through Striim’s pipelines. Designed to detect Personally Identifiable Information (PII) such as email addresses, social security numbers, and other regulated data types, Sentinel automatically masks or encrypts this information before it ever reaches its destination. By embedding protection directly into the data stream, Sentinel gives organizations precise control over how sensitive data is handled—ensuring compliance, minimizing exposure, and reducing the risk of downstream leaks or misuse. It’s proactive data governance, built into the flow of operations.

Sentinel also provides auditing and analytics for sensitive data, letting Striim customers track the type and count of sensitive data occurrences in the source database. This helps enterprises spot potential data quality issues. For example, Sentinel can detect a social security number stored in the phone_number field and mask it before the data is moved, while also counting how many source records contain social security numbers.
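Here is a minimal Python sketch of that behavior: masking an SSN found in a `phone_number` field while keeping per-field counts for auditing. A single regular expression stands in for Sentinel’s AI-based detection, which is a major simplification; `mask_record` and the masking format are hypothetical, and encryption is not shown.

```python
import re
from collections import Counter

# Hypothetical SSN pattern (NNN-NN-NNNN); real detectors cover many more formats.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
MASK = "***-**-****"


def mask_record(record: dict, counts: Counter) -> dict:
    """Return a masked copy of the record and count SSN hits per field."""
    masked = {}
    for field, value in record.items():
        if isinstance(value, str):
            value, n = SSN_RE.subn(MASK, value)
            if n:
                counts[("ssn", field)] += n
        masked[field] = value
    return masked


counts = Counter()
records = [
    {"customer_id": "c1", "phone_number": "555-123-4567"},
    {"customer_id": "c2", "phone_number": "123-45-6789"},  # SSN in the wrong column
]
masked = [mask_record(r, counts) for r in records]
print(masked[1]["phone_number"])  # ***-**-****
print(counts)                     # Counter({('ssn', 'phone_number'): 1})
```

The genuine phone number passes through untouched, the misplaced SSN is masked before it leaves the pipeline, and the counter records where it was found for later review.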

Striim’s Sentinel and Sherlock agents are powerful new tools that can enhance customers’ ability to integrate their data while meeting data governance and compliance standards.

Complementing these capabilities is Striim CoPilot—a generative AI assistant embedded directly into the Striim UI. CoPilot supports users as they design and refine data pipelines, offering real-time guidance that simplifies navigation and accelerates development across the enterprise. All of this happens within the same interface, making advanced data operations more accessible and intuitive.

From System of Record to System of Reasoning

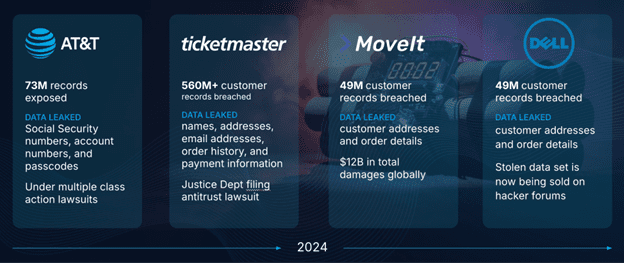

Some challenges move faster than legacy systems can track. Financial fraud is one such challenge, where subtle patterns emerge quickly, and the cost of hesitation grows with every transaction.

According to the FTC, consumers filed 2.6 million fraud reports in 2024 and reported $12.5 billion in losses, a 25% increase from the previous year. Of that total, bank transfers and payments accounted for $2.09 billion, more than any other payment method reported.

As fraud grows faster and more sophisticated, traditional detection methods often fall behind. Catching it early requires a system that can identify subtle anomalies the instant they surface.

For decades, systems like HPE Nonstop earned their rightful place by being dependable. They recorded transactions accurately, processed them quickly, and stayed online when everything else didn’t. But AI shifts the baseline. Instead of simply asking, “Where is the data?”, it asks, “What does the system understand about what just happened?”

Striim makes it possible to reason over data as it moves, placing Nonstop systems inside that reasoning loop. Instead of serving as passive repositories, they become active participants in AI-driven workflows.

Imagine your transaction engine surfacing not just that a charge occurred, but that it’s 3x higher than a customer’s average, outside their normal location, and similar to a flagged pattern from two months ago. Now, imagine that context flowing into a fraud model before the transaction clears. That’s intelligence at the edge of the business.
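The first two signals in that example, a charge roughly 3x the customer’s average and an unfamiliar location, can be computed incrementally as each event streams past. The Python sketch below is a hypothetical illustration (the `ContextBuilder` class, the 3.0 threshold, and the feature names are assumptions); it omits the pattern-similarity signal and makes no claim about how Striim or any particular fraud model consumes these features.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    count: int = 0
    mean_amount: float = 0.0
    locations: set = field(default_factory=set)


class ContextBuilder:
    """Hypothetical in-stream state that turns a raw charge into model features."""

    def __init__(self, ratio_threshold: float = 3.0):
        self.ratio_threshold = ratio_threshold
        self.profiles = {}

    def features(self, customer_id: str, amount: float, location: str) -> dict:
        p = self.profiles.setdefault(customer_id, Profile())
        ratio = amount / p.mean_amount if p.count and p.mean_amount > 0 else None
        feats = {
            "amount_to_avg": ratio,
            "above_threshold": ratio is not None and ratio >= self.ratio_threshold,
            "new_location": p.count > 0 and location not in p.locations,
        }
        # Update history only after computing features, so each charge is
        # compared against prior behavior (incremental running mean).
        p.count += 1
        p.mean_amount += (amount - p.mean_amount) / p.count
        p.locations.add(location)
        return feats


cb = ContextBuilder()
cb.features("c1", 40.0, "Austin")
cb.features("c1", 60.0, "Austin")
print(cb.features("c1", 180.0, "Lisbon"))
# {'amount_to_avg': 3.6, 'above_threshold': True, 'new_location': True}
```

Keeping the running mean incremental means no history has to be re-read per event, which is what lets this context be ready before the transaction clears.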

That’s what Striim and HPE Nonstop jointly deliver. Not just pipelines, or observability, but the ability for your most trusted systems to think in real time—at the speed your business now requires. Together, we’re shifting the question from, “Can I access my data in real time?” to “Are my systems prepared to think with me?”

Sources:

FTC, Consumer Sentinel Network Data Book 2024, March 2025, https://www.ftc.gov/system/files/ftc_gov/pdf/csn-annual-data-book-2024.pdf
