For decades, Nonstop systems have represented the pinnacle of reliability — built on the idea that no single point of failure should ever bring down a mission-critical workload. But as AI begins to embed itself into everything from fraud detection to automated operations, a harder question is emerging:

When the decisions themselves are made by algorithms, what does “Nonstop” reliability actually mean?

The illusion of trust in an AI-driven world

AI is making its way into mission-critical operations under the banner of “efficiency” and “automation.” It’s being trained to detect anomalies, generate code, predict failures, and even recommend access decisions. But in environments where downtime isn’t an option, misplaced trust can be just as dangerous as a missed patch.

Traditional Nonstop reliability was built around redundancy — hardware, networks, and storage. But trust in AI isn’t redundant; it’s opaque. You can’t fail over a model that learned the wrong thing. You can’t mirror an algorithm’s bias across two data centers and call it resilience.

The industry is learning that while AI can supercharge operations, it also introduces a new class of risk: dependency on data and models that can’t easily be audited, verified, or explained.

The perimeter is gone — and control goes with it

Nonstop systems used to live behind walls: isolated, controlled, and predictable. That model no longer exists.

Modern Nonstop environments connect through APIs, analytics platforms, identity systems, and cloud telemetry. Data flows in and out. Partners access shared services. Threat surfaces expand.

In this new reality, the perimeter isn’t where your system ends — it’s where your trust begins.

Every external integration introduces a new supply chain risk. Every shared dataset or AI model opens another potential attack vector. And every automation decision made by AI extends trust beyond your control.

This shift means that resilience can no longer be measured by uptime alone. True resilience now includes the integrity of the models, code, and data that drive automated decisions.

The new supply chain: models, datasets, and dependencies

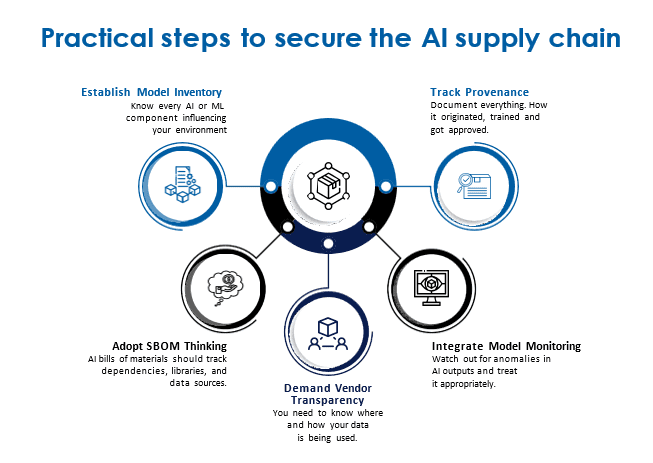

We used to think of “supply chain” in terms of vendors, firmware, or open-source code. But the AI era adds new components that few organizations are securing:

▶Model provenance – Do you know where your AI models came from? Who trained them, and on what data?

▶Training data exposure – Was sensitive operational data used to train a model that’s now being reused externally?

▶Model drift and poisoning – Could a small manipulation in training data lead to large downstream errors in production?
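One concrete starting point for the provenance question is to record a cryptographic digest of each model artifact when it is approved, and to refuse to load anything that doesn’t match. The Python sketch below assumes a simple in-house manifest; `ProvenanceRecord`, `verify_provenance`, and the model and dataset names are hypothetical illustrations, not an XYPRO or HPE API. A digest only proves the artifact is the one you approved; it says nothing about whether the training data behind it was poisoned, which still requires review of the data itself.

```python
import hashlib
import tempfile
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical manifest entry written when a model is approved for use."""
    model_name: str
    sha256: str          # digest of the approved model artifact
    trained_by: str      # who trained it
    training_data: str   # identifier of the training dataset


def sha256_of(path: Path) -> str:
    """Hash the file in 1 MiB chunks so large artifacts aren't read into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_provenance(path: Path, record: ProvenanceRecord) -> bool:
    """Return True only if the artifact on disk matches the approved digest."""
    return sha256_of(path) == record.sha256


# Usage sketch: approve an artifact, then detect a modified copy.
with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "model.bin"
    artifact.write_bytes(b"approved-weights")
    record = ProvenanceRecord(
        model_name="anomaly-detector",            # hypothetical model name
        sha256=hashlib.sha256(b"approved-weights").hexdigest(),
        trained_by="internal-ml-team",            # hypothetical
        training_data="ops-telemetry-2024-q1",    # hypothetical dataset ID
    )
    ok_before = verify_provenance(artifact, record)
    artifact.write_bytes(b"approved-weights+poison")
    ok_after = verify_provenance(artifact, record)

print(ok_before, ok_after)  # True False
```

In practice the manifest itself would need protection (for example, signing), since an attacker who can rewrite both the artifact and its recorded digest defeats the check.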

For Nonstop systems, these aren’t hypothetical risks. They’re operational ones. A misinformed predictive model could delay a transaction queue, misclassify a legitimate process as malicious, or flood operations teams with false positives.

And unlike code bugs, model behavior isn’t written line by line; it’s learned from data, so you can’t simply fix it with a patch. That’s why AI governance and real-time monitoring must become the next form of Nonstop resilience.

Redefining “Nonstop” in the age of intelligent automation

The Nonstop community has always been at the forefront of mission assurance: redundancy, failover, data integrity, and auditability. Those principles still hold. But they need to expand.

AI-driven automation should be treated like any other privileged component in a critical system:

▶Authenticate it. Every model and API should have a verifiable identity.

▶Authorize it. Apply least-privilege principles to what AI agents can do or trigger.

▶Monitor it. Audit every AI-driven action as you would a system administrator’s command.

▶Validate it. Continuously test model outputs against expected outcomes before they impact production.
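The four controls above can be sketched as a policy gate that every AI-proposed action passes through before it reaches production. This is a minimal Python illustration under stated assumptions: the agent registry, action allowlist, placeholder digests, the `$PROC1` target, and the toy rule standing in for a model are all hypothetical. A real deployment would draw identity and policy from existing security tooling rather than hard-coded dictionaries.

```python
import json
import logging
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("ai_audit")


@dataclass(frozen=True)
class ProposedAction:
    agent_id: str
    model_sha256: str  # digest of the model that produced this proposal
    action: str        # e.g. "raise_alert", "restart_process"
    target: str


# Hypothetical policy data (placeholder digest, illustrative action names).
REGISTERED_MODELS = {"ops-anomaly-agent": "3f" * 32}               # authenticate
ALLOWED_ACTIONS = {"ops-anomaly-agent": {"raise_alert", "open_ticket"}}  # least privilege


def validate_model(predict: Callable[[dict], str],
                   golden_cases: list[tuple[dict, str]],
                   min_accuracy: float = 0.95) -> bool:
    """Validate: replay inputs with known expected outcomes before trusting the model."""
    correct = sum(predict(x) == expected for x, expected in golden_cases)
    return correct / len(golden_cases) >= min_accuracy


def gate(p: ProposedAction, model_validated: bool) -> bool:
    """Authenticate, authorize, and validate a proposed action; audit every decision."""
    if REGISTERED_MODELS.get(p.agent_id) != p.model_sha256:
        approved, reason = False, "unknown agent or unexpected model digest"
    elif p.action not in ALLOWED_ACTIONS.get(p.agent_id, set()):
        approved, reason = False, "action outside least-privilege allowlist"
    elif not model_validated:
        approved, reason = False, "model failed validation against expected outcomes"
    else:
        approved, reason = True, "approved"
    # Monitor: log every decision, approved or not, like an administrator's command.
    audit_log.info(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "agent": p.agent_id, "action": p.action, "target": p.target,
        "approved": approved, "reason": reason,
    }))
    return approved


# Usage sketch: a toy threshold rule stands in for a real model (hypothetical).
def toy_predict(features: dict) -> str:
    return "anomaly" if features["cpu"] > 90 else "normal"


golden = [({"cpu": 95}, "anomaly"), ({"cpu": 20}, "normal"), ({"cpu": 50}, "normal")]
validated = validate_model(toy_predict, golden)

digest = REGISTERED_MODELS["ops-anomaly-agent"]
alert_ok = gate(ProposedAction("ops-anomaly-agent", digest, "raise_alert", "$PROC1"), validated)
restart_ok = gate(ProposedAction("ops-anomaly-agent", digest, "restart_process", "$PROC1"), validated)
spoofed_ok = gate(ProposedAction("ops-anomaly-agent", "00" * 32, "raise_alert", "$PROC1"), validated)
```

One design choice worth noting: the audit record is written for rejected proposals as well as approved ones, which is what makes the trail useful when reconstructing why an automated decision did or did not happen.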

In other words, we must move from assuming AI will behave correctly to proving that it does.

These steps might sound procedural — and they are. But procedural discipline is what has always set Nonstop environments apart.

The path forward

AI isn’t the enemy of reliability; complacency is.

We can’t assume the same controls that made Nonstop systems resilient in the past will automatically secure the intelligence we’re adding to them today.

The mission hasn’t changed — it’s still about uninterrupted trust, not just uninterrupted uptime.

The systems we build, the partners we choose, and the AI we adopt all feed into that trust equation.

And if AI is going to make operational decisions inside mission-critical systems, it must earn our trust, every single time.

That’s exactly why companies across the globe, including many of the world’s largest financial institutions, retailers, and manufacturers, have trusted XYPRO and HPE for decades to protect the integrity of their Nonstop environments. Since 1983, we’ve helped organizations evolve their security without ever compromising reliability, continuously adapting to new threats, new technologies, and now, the new realities of AI-driven automation.

Experience has taught us that resilience isn’t built overnight; it’s engineered, refined, and earned — one trusted system at a time.
