The gap between cutting-edge AI technologies and enterprise mission-critical systems presents a significant challenge for organisations seeking to leverage both. Infrasoft’s latest addition to its uLinga suite, uLinga Nexus, addresses this challenge by implementing Model Context Protocol (MCP) server capabilities that enable AI applications to interact seamlessly with HPE Nonstop systems and the applications and data they host.

The Integration Challenge

For decades, Nonstop systems have powered the world’s most critical transaction processing workloads in banking, telecommunications, and other industries where downtime simply isn’t an option. However, integrating these systems with modern AI applications has traditionally required extensive custom development, creating barriers to innovation.

The emergence of the Model Context Protocol, an open standard for connecting AI applications to external tools and data sources, provides a standardized approach to AI integration, and Infrasoft has recognized its potential to transform how organisations use AI capabilities alongside their Nonstop infrastructure.

Architecture That Makes Sense

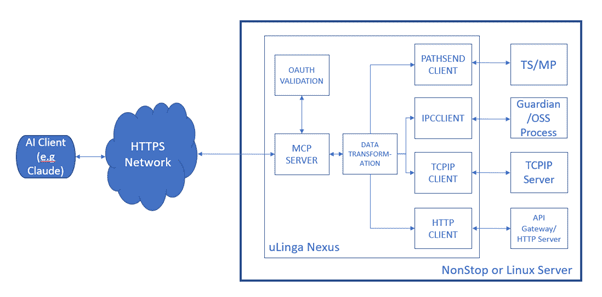

At its core, uLinga Nexus implements a four-layer architecture designed to bridge the gap between modern AI applications and Nonstop backends:

The MCP Protocol Layer provides the standardized interface that AI applications expect, implementing tool registration, discovery, and execution endpoints over HTTPS, with support for TLS versions up to and including 1.3.
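To make tool discovery and execution more concrete, here is a minimal Python sketch of the two MCP methods involved, `tools/list` and `tools/call`, which MCP carries as JSON-RPC 2.0 messages. It is not uLinga Nexus code: the in-memory registry, `register_tool`, `handle_jsonrpc` and any tool names you register are hypothetical, and the HTTPS/TLS transport is left out.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory registry: tool name -> (description, input JSON Schema, handler).
TOOLS: dict[str, tuple[str, dict, Callable[[dict], Any]]] = {}


def register_tool(name: str, description: str, input_schema: dict,
                  handler: Callable[[dict], Any]) -> None:
    """Register a tool so that it appears in tools/list and can be invoked via tools/call."""
    TOOLS[name] = (description, input_schema, handler)


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_jsonrpc(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request for the MCP tools/list and tools/call methods."""
    req = json.loads(raw)
    method, req_id = req.get("method"), req.get("id")
    if method == "tools/list":
        # Discovery: describe every registered tool and the arguments it accepts.
        result = {"tools": [{"name": n, "description": d, "inputSchema": s}
                            for n, (d, s, _) in TOOLS.items()]}
    elif method == "tools/call":
        # Execution: look up the named tool and run it with the supplied arguments.
        params = req.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return _error(req_id, -32602, "Unknown tool")
        output = entry[2](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(output)}],
                  "isError": False}
    else:
        return _error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

In a gateway like the one described, the handler would be the point where the transformation and backend layers take over; here it is just a Python callable.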

The Authentication Layer provides enterprise-grade security through an OAuth 2.0 implementation with JWT validation, scope-based authorization, and integration with existing identity providers, so organisations don’t need to reinvent their security infrastructure.

The Transformation Layer performs the critical work of converting between JSON payloads that AI applications speak and the DDL-defined binary structures that Nonstop systems understand. This bidirectional conversion also handles ISO8583 messages, making the solution particularly valuable for financial services organisations.
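ISO 8583 messages use bitmaps to indicate which data elements are present, so any JSON-to-ISO 8583 conversion has to build and parse them. The sketch below shows only that part, under standard ISO 8583 conventions: bit 1 is the most significant bit of the first byte and flags a secondary bitmap covering elements 65 to 128. The function names are illustrative, and the encoding of the elements themselves (numeric or character, fixed or variable length) is omitted.

```python
def build_bitmaps(fields: set[int]) -> bytes:
    """Encode present data elements (2..128) as an ISO 8583 primary bitmap,
    extended with a secondary bitmap when any element above 64 is present."""
    if any(f < 2 or f > 128 for f in fields):
        raise ValueError("data element numbers must be between 2 and 128")
    has_secondary = any(f > 64 for f in fields)
    total_bits = 128 if has_secondary else 64
    bits = set(fields) | ({1} if has_secondary else set())  # bit 1 flags the secondary bitmap
    value = 0
    for bit in bits:
        value |= 1 << (total_bits - bit)  # bit 1 is the most significant bit
    return value.to_bytes(total_bits // 8, "big")


def present_fields(bitmap: bytes) -> set[int]:
    """Decode an 8- or 16-byte bitmap back into the set of data element numbers."""
    total_bits = len(bitmap) * 8
    value = int.from_bytes(bitmap, "big")
    return {bit for bit in range(2, total_bits + 1)
            if (value >> (total_bits - bit)) & 1}
```

For example, a message carrying elements 2, 3, 4, 7, 11, 12 and 39 has the primary bitmap `7230000002000000` in hex.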

Finally, the Backend Integration Layer manages connections to Pathway servers, Guardian and OSS processes, and both TCP/IP and HTTP endpoints, providing flexibility in how Nonstop applications are accessed.

Real-World Request Processing

When an AI application needs to interact with a Nonstop system through uLinga Nexus, the request follows a carefully orchestrated flow. First, the client authenticates using an OAuth 2.0 JWT bearer token. uLinga Nexus validates this token and verifies that the requested operation falls within the authorised scopes.

Next, JSON parameters are transformed into the binary format the backend requires – whether that’s DDL-formatted structures or ISO8583 messages. The request is then routed to the appropriate backend based on the tool’s configuration, whether that’s a Pathway server, a Guardian process, or a TCP/IP or HTTP endpoint.

When the response returns, it undergoes the reverse transformation from binary to JSON before being formatted as an MCP response and returned to the AI application. Throughout this process, comprehensive tracing captures every detail for monitoring and troubleshooting.
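The request flow above can be sketched as a single function. This illustrates the sequence under stated assumptions rather than the product’s internals: `ToolConfig` and `process_tool_call` are hypothetical, the JWT is assumed to have been validated already (so its claims are passed in), and the transformation and backend steps are supplied as plain callables.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolConfig:
    """Hypothetical per-tool configuration; the field names are illustrative only."""
    required_scope: str
    to_backend: Callable[[dict], bytes]    # JSON -> DDL structure or ISO 8583 message
    send: Callable[[bytes], bytes]         # Pathway, Guardian/OSS, TCP/IP or HTTP exchange
    from_backend: Callable[[bytes], dict]  # binary reply -> JSON


def process_tool_call(claims: dict, tool: ToolConfig, arguments: dict) -> dict:
    """Run one tools/call request whose bearer token has already been validated."""
    granted = set(claims.get("scope", "").split())  # OAuth 2.0 space-delimited scopes
    if tool.required_scope not in granted:
        raise PermissionError(f"scope {tool.required_scope!r} not granted")
    request = tool.to_backend(arguments)  # 1. JSON -> binary
    reply = tool.send(request)            # 2. route to the configured backend
    result = tool.from_backend(reply)     # 3. binary -> JSON
    # 4. wrap the result as MCP tool output for the AI application
    return {"content": [{"type": "text", "text": json.dumps(result)}], "isError": False}
```

Keeping each stage behind its own callable mirrors the layered design: the same flow serves any backend type, and only the tool configuration changes.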

Data Transformation Excellence

One of the most technically impressive aspects of uLinga Nexus is its data transformation engine. Converting between JSON’s flexible structure and Nonstop’s rigidly defined binary formats requires handling numerous edge cases and type conversions.

The engine maps JSON types to their DDL equivalents, converts JSON numbers to appropriate precision and scale for FIXED and FLOAT types, handles arrays by mapping them to DDL OCCURS clauses with bounds checking, and manages nested structures by recursively processing JSON objects into DDL STRUCT definitions. Even null handling is configurable, allowing JSON null values to map to DDL defaults or predefined values as needed.
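A compact sketch of JSON-to-binary packing along the lines described above: scaled fixed-point values, OCCURS-style arrays with bounds checking, nested structures handled recursively, and configurable null defaults. The dictionary-based field specification stands in for a DDL definition and is hypothetical, as is the `pack` function. The big-endian byte order is an assumption to confirm against the target system, and FLOAT handling is omitted for brevity.

```python
import struct
from decimal import Decimal, ROUND_HALF_EVEN

BYTE_ORDER = ">"  # assumption: big-endian; confirm against the target system


def pack(value, spec: dict) -> bytes:
    """Pack a JSON value into bytes according to a hypothetical DDL-like field spec."""
    if value is None:
        value = spec.get("default")  # configurable null handling
    kind = spec["type"]
    if kind == "char":  # like PIC X(n): fixed length, space padded
        data = (value or "").encode("ascii")
        if len(data) > spec["length"]:
            raise ValueError(f"string longer than {spec['length']} bytes")
        return data.ljust(spec["length"], b" ")
    if kind == "fixed":  # scaled 64-bit binary integer, e.g. scale 2 stores 12.34 as 1234
        scaled = Decimal(str(value if value is not None else 0)) * (10 ** spec["scale"])
        return struct.pack(BYTE_ORDER + "q", int(scaled.to_integral_value(rounding=ROUND_HALF_EVEN)))
    if kind == "occurs":  # like OCCURS n TIMES: bounds-checked, unused slots take defaults
        items = list(value or [])
        if len(items) > spec["count"]:
            raise ValueError(f"array has more than {spec['count']} elements")
        items += [None] * (spec["count"] - len(items))
        return b"".join(pack(item, spec["item"]) for item in items)
    if kind == "struct":  # nested group: recurse over fields in declaration order
        obj = value or {}
        return b"".join(pack(obj.get(name), sub) for name, sub in spec["fields"])
    raise ValueError(f"unsupported field type {kind!r}")
```

Converting through `Decimal(str(value))` avoids binary floating-point error when a JSON number such as 12.34 is scaled to an integer.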

Recognising that configuring data transformations can be complex, Infrasoft has developed several tools to automate the process, including command-line utilities that generate data transformation configurations from DDL source and JSON schemas. The suite also includes a GUI that lets customers view and edit data transformation attributes in a web browser, so administrators no longer need to edit configuration files by hand or work directly with low-level transformation rules. This substantially reduces the time and expertise required to set up and maintain AI integrations with Nonstop systems.

Flexible Backend Integration

uLinga Nexus doesn’t force organisations into a single integration pattern. Instead, it supports multiple backend connection types to accommodate different architectural approaches.

For Pathway server integration, tools can invoke serverclasses directly using the serverclass name. Guardian and OSS process integration enables direct IPC communication via process names. TCP/IP integration supports both persistent connections and connection-per-request modes. And for systems that have already adopted HTTP-based APIs, the HTTP server integration capability provides a natural connection point.

In all cases, uLinga Nexus handles connection pooling, retry logic, and error conditions automatically, reducing the complexity that would otherwise fall to individual application developers.
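Connection pooling with retry follows a common pattern, sketched here in Python. `ConnectionPool`, its parameters and the injected `connect`/`send` callables are hypothetical; a real implementation would also need timeouts and health checks.

```python
import time
from collections import deque
from typing import Callable


class ConnectionPool:
    """Reuse idle backend connections and retry transport failures on a fresh one."""

    def __init__(self, connect: Callable[[], object], max_idle: int = 4,
                 retries: int = 2, backoff_s: float = 0.05):
        self._connect = connect      # opens a new connection (hypothetical factory)
        self._idle: deque = deque()  # healthy connections awaiting reuse
        self._max_idle = max_idle
        self._retries = retries
        self._backoff_s = backoff_s

    def call(self, request: bytes, send: Callable[[object, bytes], bytes]) -> bytes:
        last_error = None
        for attempt in range(self._retries + 1):
            conn = self._idle.popleft() if self._idle else self._connect()
            try:
                reply = send(conn, request)
            except OSError as exc:  # transport failure: discard this connection
                last_error = exc
                close = getattr(conn, "close", None)
                if close is not None:
                    close()
                if attempt < self._retries:
                    time.sleep(self._backoff_s * 2 ** attempt)  # exponential backoff
                continue
            if len(self._idle) < self._max_idle:
                self._idle.append(conn)  # return the healthy connection to the pool
            return reply
        raise ConnectionError(
            f"backend unavailable after {self._retries + 1} attempts") from last_error
```

Setting `max_idle=0` turns this into connection-per-request behaviour, since no connection is ever kept. Automatic retries are only safe for idempotent requests: resending a payment after a lost reply could post it twice.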

Security Throughout

Security permeates every layer of the uLinga Nexus architecture. Transport security is addressed via TLS 1.2/1.3 support, ensuring communications are protected in transit. JWT tokens are validated on every request, with signature verification using either HMAC symmetric keys or public keys from the OAuth provider’s JWKS endpoint.
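For the HMAC case, JWT signature verification is standardized (JWT in RFC 7519, the HS256 algorithm in RFC 7518), so it can be sketched with the Python standard library alone. `verify_hs256` is a hypothetical helper, not uLinga’s code. It checks the algorithm, signature and `exp` claim only; issuer and audience checks and the JWKS public-key path are omitted, and for those a maintained JWT library is the safer choice.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    # JWTs use unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Verify an HS256-signed JWT and return its claims; raise ValueError otherwise."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":  # reject "none" and unexpected algorithms outright
        raise ValueError("unexpected algorithm")
    signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):  # constant-time compare
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims
```

Pinning the expected algorithm, rather than trusting the token’s own `alg` header, is what stops a forged token from downgrading verification.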

The scope-based access control system ensures that AI applications can only invoke the tools they’ve been authorised to use, and those tools can only access their configured backends. Cross-tool access to backend resources is explicitly prohibited. Additionally, DDL transformation includes bounds checking for arrays and string lengths.
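One way to make cross-tool access structurally impossible is to take each tool’s backend from a read-only binding table rather than from the request. The sketch below assumes that design; `TOOL_BINDINGS`, `Binding`, `authorize` and the scope and backend names are all hypothetical.

```python
from types import MappingProxyType
from typing import NamedTuple


class Binding(NamedTuple):
    scope: str    # OAuth scope a caller must hold to use the tool
    backend: str  # the single backend the tool is allowed to reach


# Hypothetical configuration; MappingProxyType makes the table read-only at runtime.
TOOL_BINDINGS = MappingProxyType({
    "get_balance": Binding("accounts.read", "pathway/ACCT-INQ"),
    "post_payment": Binding("payments.write", "tcp/payments-host:3000"),
})


def authorize(tool: str, granted_scopes: set[str]) -> str:
    """Return the backend bound to `tool`, or raise if the caller may not use it."""
    binding = TOOL_BINDINGS.get(tool)
    if binding is None:
        raise LookupError(f"unknown tool {tool!r}")
    if binding.scope not in granted_scopes:
        raise PermissionError(f"scope {binding.scope!r} required for {tool!r}")
    # The backend comes from configuration, never from the request, so a caller
    # cannot point one tool at another tool's backend.
    return binding.backend
```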

Operational Visibility

For operations teams, uLinga Nexus generates comprehensive trace records for every MCP request. These records capture the timestamp, client identity extracted from the OAuth token, the tool being invoked, the backend target, response status, processing duration, and detailed error information when requests fail.
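A trace record with the fields listed above might look like the following sketch, which wraps a backend call, times it, and appends one JSON line per request whether the call succeeds or fails. `TraceRecord`, `traced` and the JSON-lines output are assumptions for illustration; the client identity is read from the token’s `sub` claim, where JWT access tokens conventionally carry the subject.

```python
import json
import time
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any, Callable, Optional


@dataclass
class TraceRecord:
    timestamp: str
    client_id: Optional[str]  # taken from the token's "sub" claim
    tool: str
    backend: str
    status: str = "pending"
    duration_ms: float = 0.0
    error: Optional[str] = None


def traced(records: list, claims: dict, tool: str, backend: str,
           action: Callable[[], Any]) -> Any:
    """Run `action`, then append one JSON trace line whether it succeeds or fails."""
    rec = TraceRecord(datetime.now(timezone.utc).isoformat(), claims.get("sub"), tool, backend)
    start = time.perf_counter()
    try:
        result = action()
        rec.status = "ok"
        return result
    except Exception as exc:
        rec.status, rec.error = "error", f"{type(exc).__name__}: {exc}"
        raise
    finally:
        rec.duration_ms = (time.perf_counter() - start) * 1000
        records.append(json.dumps(asdict(rec)))
```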

This level of visibility makes it possible to monitor AI integration patterns, identify performance bottlenecks, troubleshoot failures, and maintain audit trails for compliance purposes.

Getting Started

uLinga Nexus ships with sample MCP configurations, including MCPSERVER, MCPTOOL, DATATRANSFORMER, and AUTHPROFILE resources. These can be customized using either the WebCon browser-based interface or the ConsoleCon command-line interface, allowing administrators to work with the tools they prefer.

Performance That Scales

Built on uLinga’s existing data transformation capabilities, the MCP implementation leverages asynchronous I/O throughout the stack. This design enables efficient handling of multiple simultaneous requests without blocking. The use of worker processes and threads further improves achievable throughput, making the solution suitable for production workloads.
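The benefit of non-blocking I/O shows up clearly in a small Python `asyncio` sketch: twenty simulated backend calls of 100 ms each, with at most ten in flight, finish in roughly 200 ms rather than two seconds. This only illustrates the general technique, not uLinga’s own I/O model; `call_backend` and `serve_all` are hypothetical, and the sleep stands in for real backend latency.

```python
import asyncio
import time


async def call_backend(request_id: int, latency_s: float) -> str:
    # Stand-in for a non-blocking backend exchange; awaiting yields to other requests.
    await asyncio.sleep(latency_s)
    return f"reply-{request_id}"


async def serve_all(n_requests: int, latency_s: float, max_in_flight: int) -> list:
    limit = asyncio.Semaphore(max_in_flight)  # cap concurrent backend calls

    async def one(i: int) -> str:
        async with limit:
            return await call_backend(i, latency_s)

    # gather runs the requests concurrently and returns results in request order.
    return await asyncio.gather(*(one(i) for i in range(n_requests)))


if __name__ == "__main__":
    start = time.perf_counter()
    replies = asyncio.run(serve_all(20, 0.1, 10))
    print(len(replies), f"replies in {time.perf_counter() - start:.2f}s")
```

The semaphore plays the role that a worker or connection limit plays in a production gateway: concurrency rises without letting an unbounded number of requests hit the backend at once.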

The Bigger Picture

The introduction of MCP support in uLinga Nexus represents more than just another integration option. It signals a fundamental shift in how organisations can think about their Nonstop systems in the age of AI.

Rather than viewing these mission-critical systems as legacy infrastructure that must be isolated from modern innovations, or bridged via interim servers and applications, uLinga Nexus enables them to participate directly in AI-powered workflows. An AI agent analysing transaction patterns can now query Nonstop systems for real-time data. A conversational AI application can trigger processes on Nonstop systems as naturally as it might call any other API.

This capability opens new possibilities for organisations that have invested heavily in Nonstop infrastructure. They can now explore AI-powered fraud detection that accesses real-time transaction data, conversational interfaces for complex business processes, intelligent automation that spans modern and traditional systems, and AI-assisted decision support that draws on decades of transactional history stored in Nonstop databases.

Standards-Based Approach

By implementing the Model Context Protocol rather than creating yet another proprietary integration approach, Infrasoft has positioned uLinga Nexus to work with the growing ecosystem of MCP-compatible AI applications and tools. As the MCP standard evolves, organisations can benefit from a broader range of integration options without being locked into a single vendor’s vision of how AI integration should work.

Looking Forward

As AI continues its rapid evolution, the gap between what’s possible with modern AI technologies and what’s practical in enterprise environments will either widen or narrow based on the integration tools available. Solutions like uLinga Nexus help ensure that organisations running Nonstop systems can participate fully in the AI revolution without sacrificing the reliability, security, and performance that made them choose Nonstop in the first place.

For organisations contemplating AI initiatives that need to interact with Nonstop systems, uLinga Nexus provides a path forward. The combination of standards-based protocols, comprehensive security, flexible backend integration, and enterprise-grade monitoring creates a foundation for innovation that respects both the power of AI and the critical nature of Nonstop workloads.

For more information about uLinga Nexus and its MCP Server capabilities, contact Infrasoft at info@infrasoft.com.au.
