HPE NonStop servers remain the backbone of mission-critical systems across different industries. The reality is clear—the HPE NonStop platform continues to offer unparalleled benefits including the best total cost of ownership (TCO) in the industry. However, as veteran NonStop developers and operators approach retirement, organizations face the critical challenge of preserving decades of accumulated knowledge and business logic embedded within their systems.

The Growing Knowledge Crisis

When these SME developers leave, they take with them years of understanding about how the business logic actually works and why certain decisions were made. The problem gets worse because most organizations don’t have good documentation. That leaves the current teams working with old or incomplete information, which makes support difficult and program changes risky. This, in turn, leads to longer support times, larger teams, and higher costs. More importantly, the lack of understanding of the existing business logic makes it challenging to leverage new tools to modernize the applications.

The Documentation Dilemma: Why Manual Efforts Fall Short

For decades, organizations have relied on manual documentation to capture system logic—whether through developer notes, external consultants, or ad hoc knowledge repositories. In theory, this should provide continuity. In practice, it rarely delivers.

Time-Consuming and Costly: Documenting even a single COBOL or TAL program can take weeks. Scaling this effort across an enterprise portfolio is rarely feasible.

Expert-Dependent: The few people who can produce high-quality documentation are often the busiest—or retiring. Their time is limited, and replacements lack the necessary knowledge.

Inconsistent Results: Manual documentation often results in disconnected files and inconsistent descriptions due to incomplete context, especially when the task is shared among team members with differing experience and expertise.

Quickly Outdated: Applications evolve as new business logic is added, but documentation lags behind and can become obsolete very quickly. The reality is that most documentation projects are not supported on a continuous basis because of cost and effort.

These limitations have created a persistent gap: organizations understand the value of documentation but are unable to sustain the effort at scale. It’s clear that new approaches are needed.

The Generative AI Revolution

The emergence of Generative AI (or GenAI) offers a new potential solution to this challenge. It represents a breakthrough in how we approach system analysis and knowledge extraction. Generative AI possesses the ability to understand context, infer relationships, and comprehend the underlying business logic in the programs.

If harnessed properly, this technology can assist in identifying architectural patterns, business rules, and design decisions that humans would take months to document manually. GenAI can produce human-readable explanations that bridge the gap between technical implementation and business intent at an unmatched speed.

Here is a quick review of some common Generative AI terms.

- Generative AI: AI technology that creates new content (text, code explanations, and diagrams) from natural-language instructions by learning patterns in data.

- Large Language Models (LLMs): Deep learning AI models pretrained on massive amounts of text data. LLMs can understand, summarize, translate, predict, and generate human-like text and other content.

- Prompt Engineering: Crafting specific instructions to get better AI results. It is somewhat like writing “programming instructions” for GenAI.

- Context Window: The amount of text an LLM can process at once, measured in tokens.

- Hallucination: When AI generates output that is factually incorrect, nonsensical, or fabricated, despite appearing plausible.

The GenAI Hype: Expectations versus Reality

However, the explosive popularity of Generative AI has created unrealistic expectations in many organizations. Upper management, impressed by ChatGPT’s ability to write emails and create presentations, often believes that AI can solve any problem instantly. This leads to oversimplified directives like “Just use ChatGPT to document our systems” or “AI will handle all our application development needs.” While Generative AI is indeed powerful, this one-size-fits-all mentality overlooks the complexity and specialized requirements of analyzing application code from decades of customized NonStop development.

Many organizations may attempt to document their NonStop systems simply by copying code into ChatGPT or similar consumer AI tools. While this offers some instant feedback on code snippets, it creates many potential pitfalls for codebase documentation purposes:

LLM Limitation: ChatGPT and other general-purpose models can’t do efficient code analysis on their own. They lack the specialized capabilities needed to understand complex system architectures, data dependencies, and business logic relationships that are critical for comprehensive documentation. Most importantly, each model has its own strengths and weaknesses. There is no “one size fits all.”

Context Size Limitation: A typical COBOL program with includes and copybooks can easily exceed the LLM’s input limits. This forces the LLM to fragment its analysis and lose the big picture of how components work together. The resulting output is an incomplete analysis of the codebase.

Hallucination: General AI models trained on diverse programming languages often confuse NonStop-specific constructs with similar patterns from other platforms. For example, NonStop COBOL code often gets misinterpreted as IBM code, leading to fundamentally incorrect documentation.

Missing NonStop Expertise: General-purpose AI tools are not trained in depth on NonStop internals such as process pairs, Pathway, Guardian libraries, and file structures. Without this domain knowledge, the AI generates generic explanations that miss the most important aspects of your system’s behavior.
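To make the context size limitation above concrete, here is a minimal sketch of a pre-flight check that estimates whether a program and its copybooks would fit into a single LLM request. The four-characters-per-token ratio is a rough rule of thumb rather than a real tokenizer, and the 8,000-token limit and the function names are assumptions chosen purely for illustration.

```python
# Rough context-budget check for a COBOL program plus its copybooks.
# ASSUMPTIONS: ~4 characters per token is a crude heuristic, not a real
# tokenizer, and the 8,000-token limit is an illustrative number only.

CHARS_PER_TOKEN = 4            # heuristic; varies by model and by language
CONTEXT_LIMIT_TOKENS = 8_000   # hypothetical model limit


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a source text (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(program: str, copybooks: list[str],
                    limit: int = CONTEXT_LIMIT_TOKENS) -> bool:
    """Return True if the program and all its copybooks fit in one request."""
    total = estimate_tokens(program) + sum(estimate_tokens(c) for c in copybooks)
    return total <= limit
```

When a check like this fails, the code has to be split before analysis, and how it is split determines whether the model still sees related logic together.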

Recognizing these critical gaps in consumer AI tools, TIC Software set out to build a purpose-driven solution that addresses the unique requirements of NonStop code analysis.

Purpose-Driven Solution: TIC Navigator

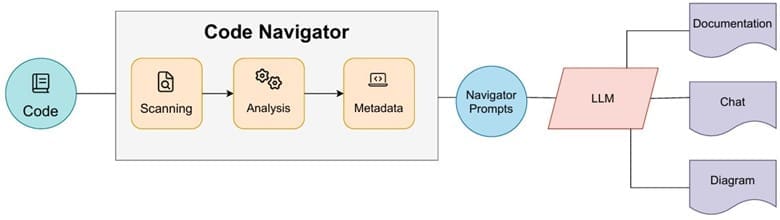

Our team at TIC Software has focused on designing a structured approach to applying Generative AI in the NonStop environment. Rather than just relying on an out-of-the-box LLM, we explored building a solution that could complement GenAI with expert NonStop knowledge.

Our work has culminated in Navigator, a solution engineered specifically for HPE NonStop environments. Navigator integrates GenAI with these features:

Navigator begins by scanning and analyzing the code to map out dependencies and create metadata to facilitate LLM processing. Its preprocessing engine segments and structures large programs intelligently, avoiding the context size limitations that plague general-purpose models.
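As a rough illustration of what a dependency scan can look like, the sketch below collects the copybooks referenced by COPY statements in a set of COBOL sources. It is not Navigator's actual preprocessing engine: the function names are hypothetical, the pattern handles only the simple `COPY name` form, and any line whose first non-blank character is `*` is treated as a comment, which is a simplification.

```python
import re

# Simplified pattern: "COPY <text-name>" at the start of a line.
# ASSUMPTION: real sources may need handling for library qualifiers,
# REPLACING clauses, and fixed-format sequence columns.
COPY_RE = re.compile(r"^\s*COPY\s+([A-Za-z0-9-]+)", re.IGNORECASE)


def find_copybooks(source: str) -> list[str]:
    """Return copybook names referenced by COPY statements, in first-seen order."""
    names: list[str] = []
    for line in source.splitlines():
        if line.lstrip().startswith("*"):   # skip comment lines (simplified)
            continue
        match = COPY_RE.match(line)
        if match:
            name = match.group(1).upper()
            if name not in names:           # keep each copybook once
                names.append(name)
    return names


def build_dependency_map(programs: dict[str, str]) -> dict[str, list[str]]:
    """Map each program name to the copybooks it references."""
    return {name: find_copybooks(src) for name, src in programs.items()}
```

A map like this is one example of metadata that can tell a later step which copybooks must accompany a program segment when it is sent to a model.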

Our prompts help AI interpret NonStop-specific components, such as TMF transactions, process pairs, and other NonStop-native elements, leading to more accurate, meaningful insights and a lower risk of hallucinations.
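One simple way to supply this kind of domain knowledge is to attach short platform notes to the prompt whenever the code mentions a related keyword. The sketch below is a generic illustration, not Navigator's actual prompts: the keyword triggers, the wording of the notes, and the `build_prompt` function are all assumptions.

```python
# Illustrative glossary: keyword found in the code -> note added to the prompt.
# ASSUMPTION: these triggers and notes are examples for this sketch only.
NONSTOP_NOTES = {
    "TRANSACTION": "TMF (Transaction Management Facility) groups database "
                   "updates into transactions that commit or back out together.",
    "CHECKPOINT": "Process pairs: a primary process checkpoints its state to a "
                  "backup process so the backup can take over after a failure.",
    "SERVERCLASS": "Pathway: requesters send messages to server classes, which "
                   "are groups of server processes.",
}


def build_prompt(code: str, question: str) -> str:
    """Assemble a prompt that carries only the platform notes relevant to the code."""
    upper = code.upper()
    notes = [note for key, note in NONSTOP_NOTES.items() if key in upper]
    context = "\n".join(f"- {n}" for n in notes) or "- (no platform notes matched)"
    return (
        "You are documenting HPE NonStop code. "
        "Do not assume IBM mainframe semantics.\n"
        f"Platform notes:\n{context}\n\n"
        f"Task: {question}\n\n"
        f"Code:\n{code}\n"
    )
```

Including only the matching notes keeps the prompt short, which matters when the code itself already consumes most of the context window.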

Navigator is not tied to a single LLM. It uses curated combinations of models based on task type and system characteristics, addressing the “wrong model” issue that limits off-the-shelf approaches. Optionally, it can be configured to work with your enterprise-standard LLM, such as OpenAI (ChatGPT).
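The idea of choosing a model per task, with an optional enterprise-wide override, can be sketched as a small routing table. The task types and model names below are placeholders invented for this example and do not reflect Navigator's real configuration.

```python
from dataclasses import dataclass
from typing import Optional

# ASSUMPTION: hypothetical task types mapped to placeholder model names.
DEFAULT_ROUTES = {
    "summarize_program": "model-long-context",
    "explain_business_rule": "model-reasoning",
    "generate_diagram": "model-structured-output",
}


@dataclass
class ModelRouter:
    routes: dict[str, str]
    enterprise_model: Optional[str] = None  # if set, overrides every route

    def pick(self, task_type: str) -> str:
        """Return the model name to use for the given task type."""
        if self.enterprise_model:
            return self.enterprise_model
        try:
            return self.routes[task_type]
        except KeyError:
            raise ValueError(
                f"no model configured for task type {task_type!r}"
            ) from None
```

For example, `ModelRouter(DEFAULT_ROUTES).pick("generate_diagram")` returns the diagram model, while passing `enterprise_model="corp-llm"` sends every task to that single model.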

No matter how good the GenAI output is, a “human in the loop” review is critical to generating correct and complete codebase documentation. Our NonStop specialists validate each output type, ensuring accuracy across documentation, conversational responses, and visual representations while maintaining AI speed and efficiency.

Navigator’s Outputs

Navigator produces comprehensive documentation that transforms NonStop code into accessible business intelligence. This includes program functionality explanations, business rule documentation, and system architecture overviews that capture the “why” behind the code—preserving critical institutional knowledge.

The chat capability creates an intelligent assistant that answers complex system questions in real time using natural language. Users can ask questions such as “How does the credit approval process work?”

Navigator automatically generates visual representations, including data flow diagrams and business process flowcharts. These visualizations provide additional insight into the underlying code logic that is useful in impact analysis, troubleshooting, and modernization planning.

Together, these outputs create a comprehensive knowledge base that goes beyond plain code documentation. They provide depth, chat interaction, and visual diagrams to ensure your NonStop knowledge is accessible organization-wide.

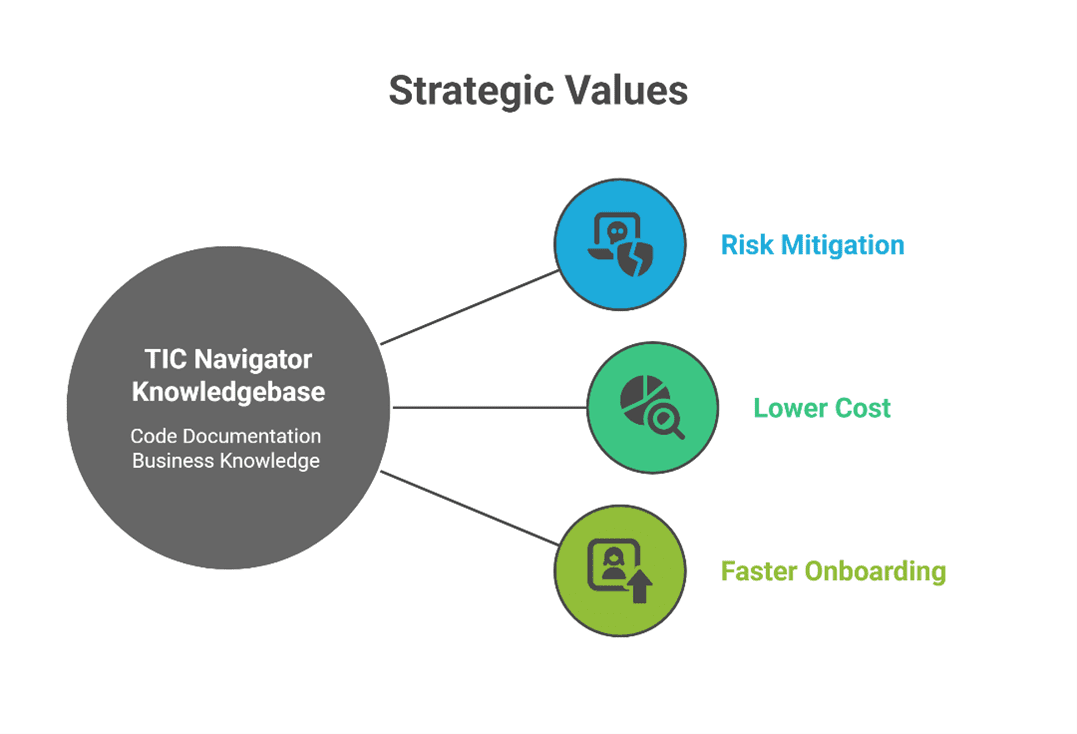

Strategic Values

By transforming implicit system knowledge into explicit, accessible documentation, Navigator delivers measurable business value across multiple dimensions. Organizations implementing comprehensive knowledge preservation strategies will see immediate operational improvements such as higher developer productivity and quicker support turnaround.

Navigator also provides long-term strategic advantages. Navigator-based knowledge repositories protect against knowledge loss by capturing complex business logic and system behavior in a form that is quickly accessible.

It lowers support costs by reducing problem escalations and external consultant engagements while enabling smaller teams to manage the systems.

New hires can quickly contribute to meaningful work rather than spending months in observation roles with limited productivity. This accelerated timeline addresses the critical skills gap as experienced developers retire, ensuring continuity of operations.

Conclusion: Future-Proofing NonStop

Future-proofing your NonStop systems means more than simply keeping applications running on the latest hardware. It is about ensuring your systems remain viable, maintainable, and valuable for years to come. Achieving this requires not only ongoing support, but also preserving and unlocking the decades of business knowledge embedded in your code, so you can build a truly modernized future.

Navigator demonstrates how GenAI can directly address these challenges through comprehensive knowledge extraction and preservation. With Navigator, you can ensure business continuity, reduce operational risks, and create a foundation for future modernization efforts—all while maintaining the exceptional TCO that makes NonStop systems so valuable.

To learn more, please visit us at https://TICSoftware.ai

Contact us via email: sales-support@ticsoftware.com
