Introduction

I worked for Tandem/Compaq/HP for 20 years. After the Compaq acquisition of Tandem Computers and the HP acquisition of Compaq, I removed the word Tandem from my vocabulary. After leaving HP, I joined US Foods, where the NonStop systems are still referred to as the Tandem systems, and I am on the Tandem Technical Engineering Team. As a result, I am back to using the words NonStop and Tandem interchangeably.

Application Modernization at US Foods

Like most companies, US Foods has engaged in application modernization for many years. New GUI front ends have been developed to give a modern look and feel to applications that use our NonStop systems as the backend. The NonStop systems remain the systems of record. Through the years, US Foods has engaged TIC Software to research, purchase, and implement software to aid in our application modernization goals.

We have implemented several application interfaces that use the message–response method.

- When I joined US Foods, the main interface to connect the GUI to the NonStop was NonStop Java Server Pages (NS JSP). We still have a few applications using that method.

- We then developed a few applications using SQL/MX Connection Services.

- Attunity Connect then became the focus until they ceased support of NonStop.

- For several years now, we have been using a REST interface provided by LightWave Server from NuWave Technologies. We currently have 111 LightWave Server Services in production.

- When the need arose for the NonStop to act as a client, we employed LightWave Client from NuWave and now have 12 LightWave Client interfaces in production.

Kafka at US Foods

In the past couple of years, several non-Tandem applications at US Foods have started using a publish-subscribe solution called Kafka to communicate. Applications communicate with each other by publishing to and consuming from Kafka topics.

“Apache Kafka is an event streaming platform used to collect, process, store, and integrate data at scale. It has numerous use cases, including distributed streaming, stream processing, data integration, and pub/sub messaging.” (1)

uLinga for Kafka for NonStop

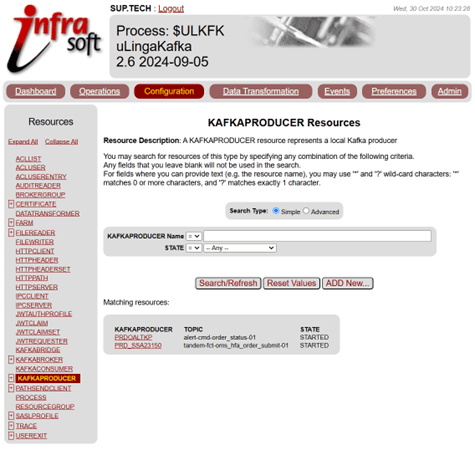

US Foods purchased uLinga for Kafka from Infrasoft when it became necessary for the NonStop to participate in the Kafka arena. With the Kafka setup already in place, we successfully published from the NonStop to a Kafka topic during our first meeting with TIC and Infrasoft. At the time of this writing, we have 2 Kafka producers in production and several producers and consumers in the development phase. uLinga features an easy-to-understand GUI that one can use to configure and monitor uLinga for Kafka. There is also a robust command interface. The illustration below depicts the GUI.

There are three distinct advantages to utilizing Kafka as the interface between two applications.

- The two applications do not have to wait on each other to do their portion of the interaction. The Producer produces the messages into the Kafka topic and moves on. It is not affected by how long it takes consumers to process that message.

- If there is more than one consumer that needs to process a message, the producer still just produces one message, and all the consumers that are monitoring that topic will receive the message.

- The Producer can still write to the topic even if a consumer service is down at the time. The consumer service will receive all the messages it missed once it is started and monitors the topic again.
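The three behaviors above can be illustrated with a toy model. The sketch below is not Kafka and not uLinga; it is a minimal in-memory stand-in, with a hypothetical `Topic` class and made-up consumer names, that treats a topic as an append-only log with one read position per consumer. Real Kafka tracks offsets per consumer group and partition and keeps messages only for a configured retention period.

```python
from collections import defaultdict


class Topic:
    """Toy in-memory stand-in for a Kafka topic: an append-only log."""

    def __init__(self):
        self.log = []                    # messages in arrival order
        self.offsets = defaultdict(int)  # next unread position per consumer

    def publish(self, message):
        # The producer appends and returns at once; it never waits on consumers.
        self.log.append(message)

    def poll(self, consumer):
        # Each consumer reads from its own offset, so every consumer sees every
        # message, and one that was down resumes where it left off.
        start = self.offsets[consumer]
        unread = self.log[start:]
        self.offsets[consumer] = len(self.log)
        return unread


topic = Topic()

topic.publish("alert-1")
billing_first = topic.poll("billing")      # both consumers are up:
warehouse_first = topic.poll("warehouse")  # each receives the one message

topic.publish("alert-2")                   # warehouse is now "down",
topic.publish("alert-3")                   # the producer keeps writing
billing_second = topic.poll("billing")

warehouse_catch_up = topic.poll("warehouse")  # restarted: gets what it missed
```

Here `billing_first` and `warehouse_first` both hold the single message produced once, and `warehouse_catch_up` holds the two messages published while that consumer was not polling.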

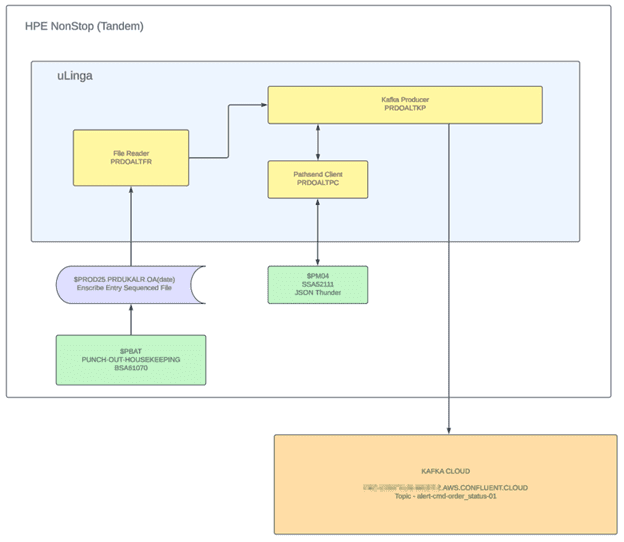

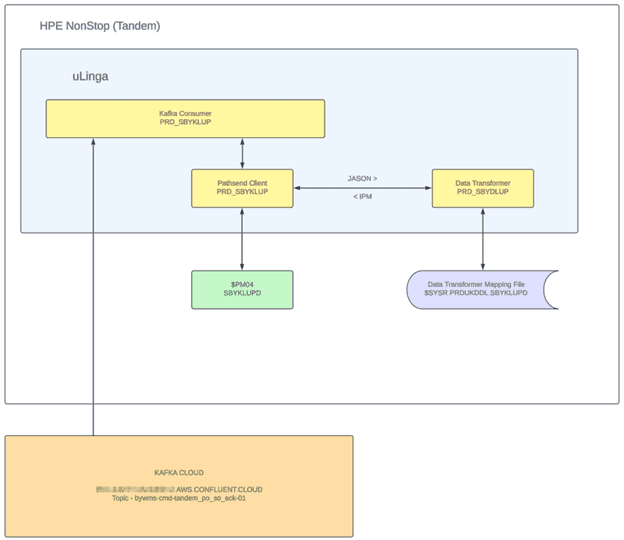

Below are descriptions and illustrations of a Kafka Producer and a Kafka Consumer in use at US Foods. If you refer to the illustrations while reading the explanation, the interactions should become clear.

Order Alert Kafka Producer Implementation

Our Order Alert implementation utilizing a Kafka Producer is depicted below.

- First, a Netbatch job kicks off. The Netbatch job compares each order to what has been shipped. When differences are detected, it writes records in an entry-sequenced Enscribe file. A uLinga File Reader picks up those records.

- The File Reader passes the record to the uLinga Producer.

- The Producer passes the record to the uLinga Pathsend Client.

- The Pathsend Client then passes the record to a Pathway Server.

- That Pathway Server obtains all the information about the order from the database and then invokes JSON Thunder from Canam Software to convert the alert buffer into a JSON message.

- The JSON message then returns to the Kafka Producer and the Kafka Producer writes that JSON message to the Topic in the Kafka Cloud.

At that point, all the applications that need that JSON message and have Kafka Consumers configured to watch that topic receive the message and act on it. As shown in the next example, the JSON Thunder portion of this solution could be replaced by the Data Transformer feature that is available in uLinga.
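To make the data flow concrete, here is a rough Python sketch of the two pieces of application logic in this pipeline: the order-versus-shipment comparison and the conversion of an alert into JSON. It is only an illustration. The production logic runs in a Netbatch job and a Pathway Server with JSON Thunder, and every field name, order number, and function name below is hypothetical.

```python
import json


def find_discrepancies(ordered, shipped):
    """Yield one alert record per item whose shipped quantity differs from the order."""
    for (order_id, item), ordered_qty in sorted(ordered.items()):
        shipped_qty = shipped.get((order_id, item), 0)
        if shipped_qty != ordered_qty:
            yield {
                "order_id": order_id,
                "item": item,
                "ordered_qty": ordered_qty,
                "shipped_qty": shipped_qty,
            }


def enrich_and_serialize(alert, customers):
    """Stand-in for the server step: add order details, then emit JSON."""
    enriched = dict(alert, customer=customers[alert["order_id"]])
    return json.dumps(enriched, sort_keys=True)


# Hypothetical sample data keyed by (order id, item).
ordered = {
    ("SO1001", "BEEF-10LB"): 5,
    ("SO1001", "RICE-25LB"): 2,
    ("SO1002", "OIL-1GAL"): 4,
}
shipped = {
    ("SO1001", "BEEF-10LB"): 5,
    ("SO1001", "RICE-25LB"): 1,
}
customers = {"SO1001": "CUST-17", "SO1002": "CUST-42"}

# Each string in this list is what would be written to the topic.
messages = [
    enrich_and_serialize(alert, customers)
    for alert in find_discrepancies(ordered, shipped)
]
```

In this sample, the fully shipped item produces no alert, while the short-shipped and unshipped items each produce one JSON message.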

Warehouse Update Kafka Consumer Implementation

One of our Kafka Consumers is illustrated below.

- The uLinga Kafka Consumer monitors a topic in the Kafka Cloud. When the Consumer receives a JSON message, it passes it to the uLinga Pathsend Client.

- That Pathsend Client forwards the JSON message to the uLinga Data Transformer.

- The Data Transformer utilizes a mapping file to transform the message from a JSON message to a Pathway Server IPM.

- That IPM is returned to the Pathsend Client, and it sends that message to the Pathway Server for processing.
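The mapping step can be pictured as a table-driven conversion from named JSON fields to a fixed-layout record. The Python sketch below shows the general idea only; it does not reflect the uLinga mapping file format, and the field names, widths, and `json_to_ipm` function are assumptions made for illustration. Text fields are left-justified and space-padded, numeric fields are zero-padded, as is common for fixed-width server buffers.

```python
import json

# Hypothetical mapping: (JSON field name, field width, field kind).
MAPPING = [
    ("warehouse", 4, "text"),
    ("item", 8, "text"),
    ("quantity", 6, "number"),
]


def json_to_ipm(message, mapping):
    """Convert a JSON message into a fixed-width record using the mapping."""
    doc = json.loads(message)
    parts = []
    for name, width, kind in mapping:
        value = doc[name]
        if kind == "number":
            field = str(int(value)).zfill(width)  # zero-pad on the left
        else:
            field = str(value).ljust(width)       # space-pad on the right
        if len(field) > width:
            raise ValueError(f"{name!r} does not fit in {width} characters")
        parts.append(field)
    return "".join(parts).encode("ascii")


ipm = json_to_ipm(
    '{"warehouse": "W12", "item": "A100", "quantity": 42}', MAPPING
)
```

Keeping the layout in a table rather than in code means a change to the server's message format only requires a change to the mapping, which is the appeal of a mapping-file approach.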

Naming conventions and TACL automation

The functions of uLinga (File Reader, Producer, Consumer, Pathsend Client, etc.) mentioned above are given a name as you configure them. You can start, stop, or abort them, or check their status, by name using the GUI or the command line interface. The names can be quite long and can document the specific function.

At US Foods, we have settled on a convention of giving every function that makes up a solution the exact same name. In the Kafka Consumer illustration above, the Kafka Consumer, Pathsend Client, and Data Transformer all have the same name. That makes it clear in the GUI and on the command line which functions are related to each other.

After landing on that naming convention, I created macros that accept the name as a parameter. For instance, when I want to stop the entire Kafka Consumer solution illustrated above, I invoke a macro from a TACL prompt: “ULSTOP PRD_SBYKLUP”. The macro performs, in order, a “STOP FILEREADER PRD_SBYKLUP”, a “STOP KAFKAPRODUCER PRD_SBYKLUP”, a “STOP KAFKACONSUMER PRD_SBYKLUP”, and finally a “STOP PATHSENDCLIENT PRD_SBYKLUP”, each only if a function of that type exists under that name.
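The logic of such a macro is simple enough to sketch. The version below is written in Python rather than TACL and only builds the list of commands; it does not talk to uLinga. The command strings and their order come from the description above, while the `configured` dictionary is a hypothetical stand-in for however the real macro checks whether a function of a given type exists.

```python
# Function types in the order the macro stops them.
COMPONENT_TYPES = ["FILEREADER", "KAFKAPRODUCER", "KAFKACONSUMER", "PATHSENDCLIENT"]


def stop_commands(name, configured):
    """Return the STOP commands for every function type configured under `name`.

    `configured` maps a function type to the set of names defined for it
    (an assumed structure, used here only for illustration).
    """
    return [
        f"STOP {ctype} {name}"
        for ctype in COMPONENT_TYPES
        if name in configured.get(ctype, set())
    ]


# Hypothetical inventory: the consumer solution has no File Reader or Producer.
configured = {
    "KAFKACONSUMER": {"PRD_SBYKLUP"},
    "PATHSENDCLIENT": {"PRD_SBYKLUP"},
}

commands = stop_commands("PRD_SBYKLUP", configured)
```

With this inventory, `commands` contains only the Kafka Consumer and Pathsend Client stops, in that order, which is why one macro can serve producer and consumer solutions alike.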

We also monitor uLinga using a REST message from our production monitoring software called New Relic.

Conclusion

US Foods is quite happy with the uLinga product. We receive great support from TIC and Infrasoft. Several of our wishes have been added to the product as enhancements. US Foods appreciates that uLinga for Kafka is a Guardian-based product and that it uses Guardian credentials for access. Also, as a long-time Tandem / NonStop person, it gives me confidence in the future of NonStop when the question is “Can the Tandem system even participate in the Kafka Cloud?” and the answer is “Absolutely we can!”
