Looking back at 2021, cybersecurity remains one of the most prominent topics in the IT industry, and one security concept has moved to the forefront in recent months: Zero Trust (ZT).

ZT is not a product or a technology; it is a security concept, or methodology. Because it is platform-agnostic, it applies to any hardware platform, including HPE NonStop.

The main concept behind Zero Trust is “never trust, always verify”: devices should not be trusted by default, even if they are connected to a managed corporate network such as the corporate LAN, and even if they were previously verified. In most modern enterprise environments, corporate networks consist of many interconnected segments, cloud-based services and infrastructure, connections to remote and mobile environments, and, increasingly, connections to non-conventional IT such as IoT devices. The traditional approach of trusting devices within a notional corporate perimeter, or devices connected to it via a VPN, makes less sense in such diverse and distributed environments. Instead, the Zero Trust approach advocates mutual authentication, including checking the identity and integrity of devices regardless of their location, and granting access to applications and services based on confidence in device identity and device health, combined with user authentication.[1]
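To make “never trust, always verify” concrete, the following is a minimal, hypothetical sketch of an access decision in Python. The field names, the enrolled-device list, and the two health signals are illustrative assumptions, not part of any standard or product. The point it shows is that the network location is recorded but never consulted: access depends only on user authentication, device identity, and device health.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_authenticated: bool     # e.g. result of an MFA check, made per request
    device_id: str               # identity presented by the device (hypothetical)
    device_patched: bool         # hypothetical health signal
    device_disk_encrypted: bool  # hypothetical health signal
    source_network: str          # "corporate-lan", "vpn", "internet", ... (deliberately ignored)


# Hypothetical inventory of enrolled, known devices.
ENROLLED_DEVICES = {"laptop-0042", "nonstop-gw-01"}


def is_device_healthy(req: AccessRequest) -> bool:
    return req.device_patched and req.device_disk_encrypted


def allow(req: AccessRequest) -> bool:
    """Grant access only if user, device identity, and device health all check out.

    source_network is never consulted: being on the corporate LAN or VPN
    earns no trust by itself.
    """
    return (
        req.user_authenticated
        and req.device_id in ENROLLED_DEVICES
        and is_device_healthy(req)
    )


# A device on the corporate LAN gets no free pass if it fails the health check...
print(allow(AccessRequest(True, "laptop-0042", False, True, "corporate-lan")))  # False
# ...while a healthy, enrolled device on the open internet can be granted access.
print(allow(AccessRequest(True, "laptop-0042", True, True, "internet")))  # True
```

A real deployment would obtain these signals from identity providers and device-management tooling on every request rather than from hard-coded values.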

ZT even found its way into President Joe Biden’s Executive Order on Improving the Nation’s Cybersecurity of May 2021:

The Zero Trust security model eliminates implicit trust in any one element, node, or service and instead requires continuous verification of the operational picture via real-time information from multiple sources to determine access and other system responses. In essence, a Zero Trust Architecture allows users full access but only to the bare minimum they need to perform their jobs. If a device is compromised, zero trust can ensure that the damage is contained. The Zero Trust Architecture security model assumes that a breach is inevitable or has likely already occurred, so it constantly limits access to only what is needed and looks for anomalous or malicious activity. Zero Trust Architecture embeds comprehensive security monitoring; granular risk-based access controls; and system security automation in a coordinated manner throughout all aspects of the infrastructure in order to focus on protecting data in real-time within a dynamic threat environment.

This data-centric security model allows the concept of least-privileged access to be applied for every access decision, where the answers to the questions of who, what, when, where, and how are critical for appropriately allowing or denying access to resources based on the combination of server.[2]
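The Executive Order’s “who, what, when, where, and how” can be read as five dimensions of a per-request access check. The sketch below is a minimal, hypothetical illustration of deny-by-default, least-privilege evaluation; the grant structure, identities, resources, locations, and time windows are invented for the example and do not come from the Executive Order.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Grant:
    who: str               # user or service identity
    what: str              # resource, e.g. a table or file
    how: str               # action: "read", "write", ...
    where: frozenset[str]  # allowed device or location labels
    start: time            # when: start of allowed window (same-day windows only)
    end: time              # when: end of allowed window


@dataclass(frozen=True)
class Request:
    who: str
    what: str
    how: str
    where: str
    at: time


# Hypothetical policy: each identity gets only what its job needs.
GRANTS = [
    Grant("batch-settlement", "payments.ledger", "read",
          frozenset({"dc-east"}), time(1, 0), time(4, 0)),
    Grant("analyst-jane", "payments.summary", "read",
          frozenset({"managed-laptop"}), time(8, 0), time(18, 0)),
]


def decide(req: Request) -> bool:
    """Deny by default; allow only if one grant matches all five dimensions."""
    return any(
        g.who == req.who and g.what == req.what and g.how == req.how
        and req.where in g.where and g.start <= req.at <= g.end
        for g in GRANTS
    )


print(decide(Request("analyst-jane", "payments.summary", "read", "managed-laptop", time(10, 30))))  # True
print(decide(Request("analyst-jane", "payments.ledger", "read", "managed-laptop", time(10, 30))))   # False
```

Anything not explicitly granted, such as the analyst reading the raw ledger, writing the summary, or connecting from an unmanaged device, falls through to a denial.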

The data-centric approach is key to a ZT architecture, and comforte has advocated data-centric security for quite some time. The following paragraphs look at some of the hurdles (or myths) that people need to overcome to implement data-centric security.

4 Myths About Data-centric Security on the Journey to Zero Trust (written by Trevor J Morgan[3])

If you’ve been following along with this entire blog series, you know that I’ve covered a lot of ground, both technical- and business-wise. I started with the fact that Zero Trust (ZT) is a set of principles, not a formalized and codified technical standard. Lots of different people and organizations have weighed in on what ZT is, the most relevant guidelines to follow, and the overall value of ZT to any organization wanting to implement it.

What ZT means for you and your organization is something that only you can determine. Of course, cybersecurity vendors and experts are happy to help, but because no single silver bullet can cover an entire ZT reference architecture or the broader IT infrastructure, you’ll need to understand each vendor’s narrow expertise within the larger universe of cybersecurity. This is a pretty big topic when you get into the weeds.

In the first post in this series, we covered the high-level principles that ZT comprises. I called out one of these as a critical point to remember: ultimately the most important asset to protect is your enterprise data, not the IT environment that houses and supports it.

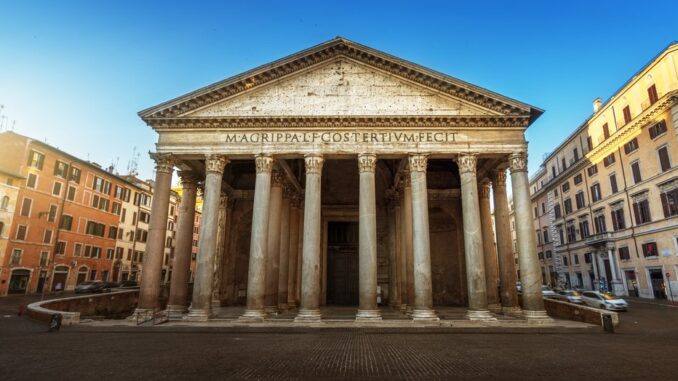

The second post picks up on this critical notion and provides data points supporting the claim that data is the primary focus of threat actors, not your IT resources or anything else within the infrastructure. If they care about a router or a server, they only do so because it is a stepping stone to get to your sensitive and highly valuable data. I introduced the idea of data as the pediment (crowning feature) of a classical structure, not just a supporting pillar. My rendition of the pillared structure is a modification of the standard pillars of ZT that you’ll encounter in many of the explanatory reference documents covering the topic.

Rather than deal with all the different types of cybersecurity encompassed by the many ZT pillars, I narrow the focus to data. Post #3 addresses data-centric security, which includes protection methods applied directly to your data instead of to the surrounding borders and perimeters. To emphasize just how important data protection is, I included an analogy of a medieval castle and the way that data-centric security such as tokenization can thwart attackers’ efforts in the same way that a secret passage would have whisked the King and Queen away from a throne room under attack. I know, any analogy falls a bit short technically speaking, but a medieval castle includes protection methods similar to those you see in a current IT infrastructure: perimeter walls and borders, intrusion detection mechanisms, checkpoints and choke points, and identity verification points throughout.
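To make the tokenization idea concrete, here is a minimal, illustrative vault-based tokenizer in Python. It is not comforte’s implementation; the class and method names are hypothetical, and a real service would keep the vault in hardened, access-controlled storage rather than in memory. It shows why stolen tokens are worthless on their own: they are random and bear no mathematical relationship to the original value.

```python
import secrets


class TokenVault:
    """Toy vault-based tokenizer (hypothetical, in-memory, for illustration only).

    Tokens are random, so they carry no mathematical relationship to the
    original value; only the vault can map a token back.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Return the existing token for a known value, so equal inputs map to
        # equal tokens and joins/lookups on protected data still work.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # regenerate on the (unlikely) collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str, caller_authorized: bool) -> str:
        # In a Zero Trust design, caller_authorized would come from a per-request
        # policy decision, not from where the caller sits on the network.
        if not caller_authorized:
            raise PermissionError("detokenization denied")
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")
print(token)  # random each run: "tok_" followed by 16 hex digits
print(vault.detokenize(token, caller_authorized=True))  # 4111111111111111
```

An attacker who breaches the application database sees only tokens; recovering the real values additionally requires breaching the vault and passing its authorization check.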

In my last entry, post #4, I suggest data protection as the most logical starting point for a ZT initiative and use a cost-benefit illustration to support my suggestion. At comforte, we’ve helped customers reduce their expenditures significantly while achieving a very granular level of control over sensitive data access. I actually cite one of those examples in the blog post.

No good idea exists without pushback, and the idea that a ZT implementation should start with data-centric protection does generate resistance. That’s only natural. What I want to do now is quickly address a few of these claims, or rather myths, and provide a response in support of data-centric security such as tokenization as the natural starting point for any ZT initiative.

Myth #1: Data-centric security is too expensive

The goal of a ZT initiative is to eliminate implicit trust across the IT infrastructure by using tools and processes to control, challenge, and authenticate data and resource requests. The more granular you wish to be, the more expensive this proposition becomes. For example, segmenting the network into ever smaller zones to achieve very fine-grained control requires an enormous expenditure on network equipment and monitoring capabilities, along with a corresponding increase in operational complexity. For a sizeable enterprise infrastructure, this type of effort can cost many millions of dollars in initial investment alone, not to mention the increase in operating costs. By comparison, our data-centric security platform costs a fraction of that while providing granularity of control down to the individual data element (obfuscating even small portions of sensitive data). We have anecdotal evidence that our platform can cost half as much as a single phase of network segmentation, if not less. With savings like that, it bears investigation and some comparative shopping.

Myth #2: Data-centric security is too difficult to implement

One of the earlier claims against data-centric security such as tokenization is that it can be very difficult to implement. Depending on the platform and how it folds into an application environment, this claim might still be true. However, with comforte’s data-centric security platform, we’ve seen implementations completed in weeks to months, not months to years as can be the case with other data security platforms in large-scale enterprise environments.

We bring four major differentiators to the table. First, our platform integrates transparently and can secure data on the fly for file and batch processes. Second, it can integrate with business applications without the need to change the record format of the original data, which is especially helpful for running workloads and analytics on production data. Third, it supports modern micro-service architectures for applications running in modern cloud environments, container workload ecosystems, or private cloud/Kubernetes platforms. And finally, our easy-to-use APIs integrate with any common language or script.
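Keeping the original record format intact is usually achieved with format-preserving tokens. The following is a hedged sketch of the idea, not comforte’s algorithm: a toy vault that replaces all but the last four digits of a card number with random digits, so a fixed-width record keeps its length and layout. The 16-character field layout, the sample record, and all names are assumptions for illustration; production systems typically rely on vetted schemes such as the format-preserving encryption modes in NIST SP 800-38G, or on a hardened token vault.

```python
import secrets

PAN_WIDTH = 16  # hypothetical layout: card number in columns 0-15


class FormatPreservingVault:
    """Toy vault: swaps leading digits for random ones, keeping length,
    character class, and the last `keep_last` digits."""

    def __init__(self, keep_last: int = 4) -> None:
        self.keep_last = keep_last
        self._forward: dict[str, str] = {}
        self._reverse: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        if not pan.isdigit() or len(pan) <= self.keep_last:
            raise ValueError("expected a digit string longer than keep_last")
        if pan in self._forward:
            return self._forward[pan]
        head_len = len(pan) - self.keep_last
        while True:
            head = "".join(secrets.choice("0123456789") for _ in range(head_len))
            token = head + pan[-self.keep_last:]
            # Reject tokens equal to the input or clashing with existing entries.
            if token != pan and token not in self._reverse and token not in self._forward:
                break
        self._forward[pan] = token
        self._reverse[token] = pan
        return token

    def detokenize(self, token: str) -> str:
        return self._reverse[token]


def protect_record(record: str, vault: FormatPreservingVault) -> str:
    """Tokenize only the card-number field; the rest of the record is untouched."""
    pan, rest = record[:PAN_WIDTH], record[PAN_WIDTH:]
    return vault.tokenize(pan) + rest


fp_vault = FormatPreservingVault()
record = "4111111111111111JANE DOE            0012500"
protected = protect_record(record, fp_vault)
print(protected)  # same length and layout; first 12 digits random, last four "1111" kept
```

Because the protected record has the same width and character classes, downstream programs that parse fixed-width files can usually keep running without changes.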

Myth #3: Data-centric security is too difficult to operate and maintain

Enterprises typically deliver tokenization as a core service within the business with extremely high internal service levels (six nines, i.e., 99.9999% availability, or better) in order to support aggressive service levels with their partners and third-party data processors. Downtime is simply not an option, nor is the inability to scale to emerging market requirements on a rapid, agile, and automation-driven basis. Traditional tokenization, however, requires a ‘peak load’ architecture that suffers from overprovisioned resources, high costs, and reserved compute. More modern architectures allow cost and performance to scale dynamically in real time, using robotic and increasingly intelligent automation strategies. Our data security platform follows a modern Infrastructure as Code model with a fault-tolerant, cloud-ready architecture that enables process automation, robotic management, and machine-readable input configuration and outputs.
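“Six nines” means 99.9999% availability. The short calculation below (an illustrative helper, not part of any product) shows how small the resulting yearly downtime budget is, which is why the text treats downtime as not an option. It assumes a 365.25-day year.

```python
# Julian year (365.25 days), a common convention for availability math.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 seconds


def downtime_budget_seconds(nines: int) -> float:
    """Maximum downtime per year allowed by an availability of `nines` nines.

    E.g. nines=6 means 99.9999% availability, so 10**-6 of the year may be down.
    """
    return SECONDS_PER_YEAR * 10 ** (-nines)


for n in (3, 4, 5, 6):
    budget = downtime_budget_seconds(n)
    print(f"{n} nines: {budget:,.1f} s/year ({budget / 60:,.2f} min)")
```

At six nines, the whole yearly budget is a little over 31 seconds, roughly 2.6 seconds per month, which leaves no room for planned outages or slow, manual scale-out.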

Myth #4: Data-centric security isn’t comprehensive enough

Data protection methods such as tokenization are just part of the overall workflow. Other data security platforms may not help you upstream to discover and understand data within your environment, which leads to protection that is not comprehensive across all your data. Our platform provides data discovery and data classification capabilities so that you can first find sensitive data, understand its lineage (who accesses it, who uses it), and then apply the right protections. We say it often around here, but I will say it again: you can’t protect what you don’t know exists!
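Discovery tools commonly combine pattern matching with validation to reduce false positives. As a minimal sketch of that first step (not how comforte’s discovery works; the regular expression and function names are assumptions), the snippet below flags likely payment card numbers in free text using a digit pattern plus the Luhn checksum that card numbers carry.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings in `text` that look like card numbers and pass Luhn."""
    hits = []
    for m in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", m.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits


sample = "Order 1234, card 4111 1111 1111 1111, ref 4111111111111112."
print(find_card_numbers(sample))  # ['4111111111111111']
```

The reference number fails the checksum and is ignored; real discovery and classification go further, covering many data types, structured stores, and the lineage questions mentioned above.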

Most things worth doing can’t be accomplished all at once. This is the reason that software companies use the term “journey” to describe what their customers experience as they engage in long-term technology initiatives. While it may be a bit of a cliché, I will go ahead and appropriate the term nonetheless. You’re trying to figure out whether Zero Trust is something that your organization should and can accomplish. You’re trying to determine what the steps are in the process and, more importantly, where to start. And you’re trying to see where this whole initiative can take your organization, in terms of both risk reduction and regulatory compliance, benefits that can offset the effort and expense of implementing it.

In truth, this whole Zero Trust process is very much a journey and one you’ve never traveled before, but it’s not one you have to travel alone without a clear map showing the way forward. While we’re a software vendor that’s partial to the power and utility of our own enterprise data security platform, we’re willing to have those open and honest discussions about where you are in that journey—if you’ve even started it—and whether it makes sense to start with data-centric security.

[1] https://en.wikipedia.org/wiki/Zero_trust_security_model

[2] https://www.whitehouse.gov/briefing-room/presidential-actions/2021/05/12/executive-order-on-improving-the-nations-cybersecurity/

[3] https://insights.comforte.com/4-myths-about-data-centric-security-on-the-journey-to-zero-trust
